Introduction

The foundational framework for Citrini Research is the hunt for megatrends in markets – these are secular shifts with the potential to transform industries and the parts of society they touch. Meanwhile, we are also traders and, as such, attentive to entries. The best entry points, we believe, are often found at cyclical inflections. Those periods when a rebound from a “crash” or a “bust” is in progress but market sentiment remains depressed present fertile hunting grounds for new themes.

Ouster (OUST US) represents a perfect example, and so we were thrilled to have the opportunity recently to sit down with the company’s Chief Executive Officer and Co-Founder, Angus Pacala, and SVP, Chen Geng.

Our view is that Ouster is positioned squarely in the center of the megatrend that is robotics and automation, which is why we included it in our basket for our most recent thematic primer, Humanoid Robots.

As the largest Western LiDAR provider, Ouster has carved out a leading position in the competitive LiDAR market. LiDAR stands for Light Detection and Ranging, and it is a remote sensing technology that measures distance by illuminating a target with laser light and then analyzing the reflected pulses. Ouster’s digital technology integrates LiDAR onto CMOS chips for cost and performance benefits, and the company pairs this with a diversification strategy across automotive, industrial, robotics, and smart infrastructure.

Ouster is targeting significant growth in all four verticals, and sees the market for LiDAR technology as a $70 billion opportunity, with expectations that the adoption of LiDAR outside of automotive will surpass that within the automotive sector.

The company anticipates substantial volume growth as customers transition from prototypes to commercialization. Overall, Ouster is optimistic about the potential for LiDAR to grow for decades, not just years, as it continues to tap into these emerging markets.

While maintaining a lean cost structure, Ouster is targeting 30-50% annual revenue growth and gross margins of 35-40%. The company has met or exceeded its guidance for nine consecutive quarters. Revenue is roughly evenly split among automotive, industrial, robotics, and smart infrastructure, with notable clients such as John Deere, Komatsu, Motional, May Mobility, Forterra, and Serve Robotics. Ouster has $171M cash on hand as of 3/31 and burned ~$37M in 2025. Ouster’s consistent revenue growth and healthy gross margins put it on a path to profitability.

Please enjoy the transcript of our discussion with the company below:

The Transcript

Disclaimer: Our In Conversation series seeks to provide incremental information on companies we have discussed as potential beneficiaries of thematic trends. We reach out independently to management of companies with little to no traditional coverage to discuss their business qualitatively. CitriniResearch never receives compensation from any company we cover. This transcript was generated by audio-to-text transcription software and has been edited for clarity and brevity. It is presented as-is and may not reflect exact quotes or may lack context. We have curated the below text to highlight the most relevant aspects of our discussion. All emphasis has been added by CitriniResearch.

CitriniResearch

Thanks for joining us today. To start, for readers that are new to Ouster or were introduced by our recent primer, can you give us an overview of what Ouster does?

Angus Pacala

Sure. Ouster makes digital LiDAR sensors and software solutions. LiDAR sensors are basically the eyes of robots, the eyes of machines that need to move autonomously through their world. LiDAR stands for Light Detection and Ranging. It's similar in principle to radar or sonar or echolocation, where you bounce some sound or radio waves, or in the case of LiDAR, light waves off of objects, and you time how long it takes for the signal to return back, which allows you to calculate the distance of those objects from the source of the signal. So it's a 3D sensor that gives highly reliable information about the position of objects in the world.

LiDAR is the predominant sensor used in this new wave of physical AI because autonomous robots need to navigate the world hyper reliably and flexibly. So whether it's a robotaxi or an automated robot in a warehouse, or a humanoid robot or a traffic intersection, LiDAR is the best sensor for perceiving the environment, knowing where all the objects are, and giving the system information to decide what to do next.

The alternative to LiDAR is a camera, the traditional eyes of machines. And cameras working with AI have made immense strides in their capability. But even with all of the advances in AI and processing, LiDAR is still superior to cameras in terms of reliability and precision of measurement. In fact, all of the advances in AI supporting cameras also apply to LiDAR.

So, Ouster builds LiDAR sensors. We actually are the largest western provider of this technology, and we are the inventors of what we call digital LiDAR technology. Digital LiDAR is the idea of putting the complexity of a LiDAR system onto a silicon chip. This allows our products to ride the wave of Moore’s law in terms of performance improvements, similar to what has happened over time in other silicon-based products, whether that is CPUs, GPUs, or camera technology. Ouster developed the first LiDAR that put the LiDAR sensor onto a custom silicon chip in 2018 and we still design all our silicon in-house today. The benefit of Ouster's digital LiDAR technology is that it improves exponentially like other semiconductor-based products. Digital LiDAR sensors are also more affordable, more flexible, and more compact and robust. We can make smaller sensors that are more power efficient and more capable.

This versatility becomes supremely important when you are trying to put a sensor like LiDAR everywhere. And LiDAR really is going everywhere. We founded the company in 2015 on the thesis that autonomous machines were going to be everywhere and outlined a commercial and product strategy to capture this diversity of applications. At that time, there was a lot of hype around specifically robotaxis and autonomous vehicles. But we saw that actually this was the start of a broader trend in physical AI, and literally every moving machine on earth was going to end up being robotic. So we positioned Ouster early on to play in multiple verticals. We made sure we could serve the automotive, industrial, robotics, and smart infrastructure verticals as they moved towards autonomy. Over the last 10 years, that has really started to play out, and we are benefitting from that tailwind as a company. We have Jensen Huang standing on stage at GTC saying “Everything that moves will be robotic someday, and soon.”

CitriniResearch

“The era of robotics is now,” I think he also said. So that's a perfect segue. So you basically serve three areas: automotive, smart infrastructure, and robotics. Do I have that right?

Angus Pacala

Well, we put it in four buckets. There is automotive, smart infrastructure, robotics, and industrials. The difference between robotics and industrials is that robotics encompasses humanoids and these futuristic emerging use cases. Industrials involves applications in things like agriculture, construction, mining, warehousing and logistics – think autonomous tractors and forklifts.

CitriniResearch

Can you sketch out the size, growth rate, and design cycle lengths for your different verticals?

Angus Pacala

Sure, I can speak to automotive and industrial verticals. But stepping back to talk overall growth rates for a minute, two years ago we put out a long-term financial framework on a path to profitability that entailed 30-50% annual revenue growth, 35-40% gross margins, and holding our operating costs constant. In the last nine quarters we have hit all those targets. We have actually had nine consecutive quarters of revenue growth, and we are now hitting our margin target. We grew over 30% in 2024, and we see growth staying in that 30-50% range as we approach profitability.

When you look at the sub-verticals, consumer automotive has been very slow to adopt LiDAR overall due to the technical difficulty of the problem, and to some degree due to the lack of conviction from automakers on how to roll out automation and autonomy in their cars. So to date, the revenues have not materialized and this is why Ouster diversified early on. It’s also a reason why some other LiDAR companies are struggling right now – because they focused too much on just consumer automotive.

We serve the robotaxi industry, where there has actually been a strong uptick in interest. Waymo has proven the model. We have a great partner in May Mobility that's now signed publicly with Uber to get onto their program and is expanding significantly in the United States. We have our customer Motional, a Hyundai company, continuing to invest in their robotaxi effort. So we actually have some tailwinds there, and we also have a number of customers in the robo-trucking market.

I’m very bullish on the industrial use cases because we’ve been working with these customers for many years and I’m seeing tranches of them move to the next stage of development or in some cases, full production. Customers like John Deere, customers like Komatsu, who we just announced in the last quarter. We've been working with them for three to five years. They have a narrower technical problem to solve, they have a clear ROI for the automation they are developing, and they’ve stayed focused.

So we have a lot of irons in the fire with these industrial customers. As they move from R&D into production, their volume kind of hits a light switch. So we'll see, you know, tens or hundreds of units of volume per year for R&D go into thousands, moving into tens of thousands of units of volume.

CitriniResearch

I want to go a lot deeper into the different problems that you guys are solving. Before we get into that, can you give us the origin story of how you guys became a company, how you guys became public?

Angus Pacala

I founded Quanergy Systems in 2012, which was one of the first competitors to Velodyne, the inventor of 3D LiDAR. At Quanergy, I was the director of engineering, brought a product to market, and Mark Frichtl was the first employee at Quanergy. Mark and I left Quanergy and co-founded Ouster in 2015. Ouster went public in 2021, and acquired Velodyne in 2023.

The premise for Ouster was to build digital LiDAR sensors and bring LiDAR into the modern age by integrating LiDAR technology onto low-cost CMOS chips, and to diversify across industries.

Those are really the two founding theses and the latter was based on this idea of everything going robotic. Even at that time we were looking around at all these robotaxi companies and we're like, ‘the one truth is that all these companies are going to be late on their timelines.’ Mark and I are both engineers, and we know that it’s notoriously hard to accurately predict timelines when working on hard problems. So we figured that all these other companies – our potential customers – would not be able to really, truly predict their timelines to market. So we needed to build a company that was resilient to potential delays, and also pursuing a greater overall TAM given every other LiDAR company was only trying to build LiDAR for automotive uses.

There were a lot of LiDAR companies that were started around that time. I remember investors telling me they were tracking over 100 LiDAR startups in the 2018 timeframe. So it's pretty crazy. We were able to get to market with a product before most of our competitors, and then we iterated relentlessly from there, improving our products over time. One of the things we've done best in this industry is core technology development.

Ouster, along with a lot of other LiDAR companies, went public through SPAC in 2021. We were a public company overnight, and we had to become more mature in our processes, business systems, and the customer base. In early 2023, we merged with Velodyne with a thesis built on considerable operational synergies, an extensive IP portfolio, and a significantly stronger financial position. We have executed really well on that thesis, with 9 consecutive quarters of revenue growth, a cost structure that is roughly half of the prior standalone companies, and substantial progress on our long-term financial framework that’s put us in a position to become profitable. We also have one of the strongest balance sheets in the industry, with $171 million of cash at the end of the first quarter, and a significantly reduced burn rate. So that's how Ouster got to where it is today.

In terms of commercial scale, we are the largest western provider of this technology and a major player globally, with over 1,000 end customers. Last year we did $111 million in revenue and shipped over 17,000 sensors.

There are two companies out of China, Hesai and RoboSense, that generate more revenue but rely heavily on the Chinese automotive market. We have a diverse customer base and a lot of name brand customers now using our products like John Deere and Komatsu, and we just did a press release with Nvidia on Physical AI. So a lot of good stuff going on there.

CitriniResearch

You spoke a little bit about how it's more difficult to have a self-driving car on city streets than it is to have an autonomous tractor. When it comes to robotics or humanoid robots specifically what kind of problems do those robots have and what do you solve for those robots?

Angus Pacala

Mobile robots like a humanoid robot or our customer Serve Robotics (SERV US) that does delivery on public streets, they all need to navigate and interact with cluttered, diverse, and novel environments. Part of any of these companies' core business models is that the robot can navigate and not bump into stuff and definitely doesn't hurt anyone.

So this concept of not hurting anyone, this is a new breed of robotics that interacts in and around humans. That’s not what robotics has been in the past. When you think robots, most people think of an automated car factory with huge robotic arms, laser safety screens, and no humans in sight. That’s because these machines are dangerous and they have no perception to know, to sense where a human is and not hit them. So you just keep all the humans away. This is a different thing. There are humans everywhere interacting with these robots.

The business model of all these companies is that you can buy this robot. It’s going to fold your laundry in your house and interact with your kids and it cannot make one mistake, you cannot hurt someone that has bought this robot. It's a company-ending issue. So the requirement on perception and safety is at an extreme level. It's a perfection requirement essentially. So that's where the eyesight of these robots, the perception system of these robots is so critical. If you have a human in the loop like Tesla Autopilot, you can make a mistake every couple hours or every couple of days with a camera and AI system. It's a good success rate for cameras and AI. Once every few days it makes an error but you have a human in the loop backing it up.

With humanoid robots, you don't get that chance. Humanoid robots can never run into your toddler. It really is a “never” situation. LiDAR provides that guarantee on sensing coupled with all kinds of advanced AI algorithms. Fundamentally it measures exactly what you care about, which is the 3D environment, with a very direct method. Shoot a laser at it, bounce it off and use the speed of light to determine the distance. So what we're providing is a guarantee on sensing and we're providing a more affordable, more capable LiDAR sensor than our competitors in this space.
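Editor's illustration: the "shoot a laser, bounce it off, and use the speed of light" method described above reduces to a single formula, distance = (speed of light × round-trip time) / 2. The Python sketch below is only a worked example of that arithmetic. The function names are hypothetical and are not part of any Ouster product or API.

```python
# Direct time-of-flight ranging: distance = (speed of light * round-trip time) / 2.
# Illustrative arithmetic only; function names are hypothetical.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value, by SI definition


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a target given the round-trip time of a light pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # Halve the path length because the pulse travels out and back.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse relation: how long a pulse takes to reach a target and return."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    for t_ns in (10.0, 100.0, 1000.0):
        d = tof_distance_m(t_ns * 1e-9)
        print(f"{t_ns:7.1f} ns round trip -> {d:8.2f} m")
```

A 100-nanosecond round trip corresponds to a target roughly 15 meters away, and each nanosecond of timing error shifts the measured distance by about 15 centimeters, which is why the timing electronics matter so much.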

CitriniResearch

Going a little further into the different kinds of customer profiles that you have and their design cycles. Like a car manufacturer thinks in model years and then a DOT project is going to think in grants and then Serve Robotics, for example, went from pilot to 2000-unit purchase order in like 24 months. How do those cadences shape your R&D and inventory bets?

Angus Pacala

We talk with customers all the time and one of my roles, which is a shared role between me and my co-founder Mark, is to be the Chief Product Officers of Ouster and to define the roadmap. We always have a view on the next product that we're releasing. There's a diversity of requirements and we think about our product roadmap as expanding layers of capability with no regressions to our customer base. That's an iterative kind of development process that is really powerful if you can achieve it. The next generation is better or equal in every way. We've held to that and what it allows us to do is identify that next layer of the onion.

If there are specific customer requirements that we aren't hitting right now that they want in the next generation, we can build that new layer of the onion. We have a pretty unified architecture and product portfolio. We have a lot of SKUs and configurability in what we ship to customers. But the core hardware and technology that we develop is quite unified and we just focus on expanding its overall capability. That's changing a little over time. Like I said, we're going to have some more product families coming out, the DF family, which is this flash solid state LiDAR family that's in development, that'll be a new architecture to go alongside our spinning scanning OS sensors.

But in any case, yeah, we survey all the customers and we build to this ever expanding requirement set.

CitriniResearch

And solid state, that's just fixed and not moving?

Angus Pacala

It's fixed, not moving like our OS sensors where there's a spinning component so it can see in 360°, which is a great feature for most customers. There are some customers that want a more tightly integrated product, so you put a sensor that looks in a particular direction in the body panel of a car or the chest of a humanoid robot and then it just looks in one direction. So that's the DF sensor.

CitriniResearch

Can you translate some of the KPIs that you watch? Like, what does MTBF (mean time between failures) at 5000 hours mean for a warehouse robot? And how does that shift for a robotaxi or a smart intersection or a humanoid robot?

Angus Pacala

So there's the reliability of the data stream coming off the sensor, i.e. can you measure the distance to the refrigerator door consistently? LiDAR gives you that. Then there's product reliability, can it last a lifetime? Robotics is easier in a lot of ways. The requirements are potentially lower than the heavy machinery industrial requirements, where Deere's equipment needs to last for 10 years in an agricultural field that's super hot, dusty and has a lot of shock and vibration. So their requirement would be above the kind of lifetime requirement of a humanoid robot operating in a house. But you know, there's some give and take there.

Humanoid robots can fall down and have huge shockwaves. So there are some real nuances in the engineering of sensors that go on platforms. Broadly speaking, the MTBF, the time to failure requirement for industrial equipment, is far longer than for this wave of robotics that is going into people’s homes or onto factory floors. It might be 5 years for those robots versus 10 years for industrial machinery.

CitriniResearch

So if you were going to give us a rough cut of 2024, your revenue between auto and infrastructure and mobile robotics and industrial, which sub-vertical drives the highest incremental gross profit every time you book another $10 million in revenue?

Angus Pacala

On the revenue split in 2024, we had a roughly even split across all four verticals, which is a good thing. They're all growing. We have not given a breakdown of margin by vertical. If you think of this in terms of ASPs and the cost appetite of the different customer sets, they're wildly different, right? A giant industrial machine, a hauler in a mine might be a ten million dollar machine. And then you have a humanoid robot that a person needs to buy and that might be a ten thousand dollar device.

There are a lot of robots, like a robotic lawnmower, that might be a thousand dollar machine. So, the perception payloads need to scale down with the cost of the product that's being provided to the end customer. So you can really see those orders of magnitude. It's indicative of how the sensor payloads and the profitability of these different spaces might play out. We're in pretty early days of optimizing for profitability in any individual vertical. For instance, we sell the wide field of view OS0 LiDAR sensor to our robotics customers and to our industrial machinery customers.

So it's wildly more capable in many ways than it needs to be for operating in an indoor environment because industrial machines are operating on public streets and in mines and in fields and it's just one product. There is an opportunity for us to improve performance and reduce cost for the robotics industry, but it's just not something that we felt is needed quite yet. It's a natural place for us to go with our product roadmap.

CitriniResearch

So if you were going to give a couple examples of end products that your sensors go into, how many sensors would go into a robotaxi? How many sensors go into smart intersections?

Angus Pacala

So a lot of the number of sensors on a platform comes down to how big it is because of the field of view of the sensors. So a big mining machine might have seven sensors on it. A robotaxi like May Mobility for instance has five Ouster LiDARs on it, a top mounted long range and four surround view. That's a pretty standard robotaxi configuration. The requirement there is that there are no blind spots anywhere because you can't crash a car, right? You have to be able to see someone leaning up against the car right next to it. So that's five.

We actually have a lot of robotic forklift customers that have five sensors on the robot as well, just because of the field of view requirements of seeing a pallet being picked up by the forks. Then forklifts actually drive forward to pick something up and drive back after you've picked up the load. So you need a field of view in all these different directions. Then something like Serve or a humanoid robot. These are getting smaller in scale, lighter-weight. So the safety requirements are a little more relaxed. They might only have one LiDAR sensor. Serve Robotics has one LiDAR on every unit and our humanoid robot customers have one of our spinning LiDARs on every unit.

As we move into more performant, lower cost LiDARs that we could potentially release, a lot of those are moving to solid state. They don’t have the 360° field of view of our scanning sensors. You might have four solid state LiDARs on the body of a humanoid robot or on the four sides of a Serve robot. But I think roughly the combined ASP of those four sensors might equal one spinning LiDAR.

CitriniResearch

How does Ouster differentiate from other Western LiDAR companies (Luminar, Innoviz, Aeva, etc.)?

Angus Pacala

First, digital technology. Ouster is built on silicon CMOS that leverages Moore's law and supports a single digital architecture across the entire product portfolio. Second, AI Software. Our Gemini and Blue City software solutions leverage AI models to detect, classify, and track objects to deliver actionable insights for our customers. And third, diversified and proven business. Ouster is nearing a $140M annualized revenue run-rate, with gross margins of ~40%. We sell into 100+ use cases across four primary verticals - Automotive, Industrial, Robotics, and Smart Infrastructure. Our peers generate de minimis revenue with significantly negative gross margins; their products are largely not commercially validated, and they focus only on the consumer auto market.

CitriniResearch

I was reading through some of your product stuff and something that stood out to me is this in-sensor safety fence firmware. Can you talk a little bit about that? Which sub-vertical do you think adopts it first and how are you thinking about attach rates with that?

Angus Pacala

This is part of that product roadmap of ever expanding capability. We announced 3D zone monitoring and it's an on-sensor firmware upgrade to all of our REV7 sensors. So it comes free. All customers can take advantage of it who have bought a REV7 sensor. It adds the ability to define a region in 3D space around the sensor, a zone, think of it as a 3D box in front of you. The sensor will then set up triggers for when objects go into that zone, or different criteria that you can configure. Then the sensor will alert the client or whatever is ingesting the sensor data about zone occupancy or lack thereof.

You can have a bunch of tuning, so it's a simple form of perception that has existed on industrial-style sensors for a very long time but in a much simpler form. The way this is used commonly is in warehousing robotics like an automated forklift that has two perception systems running. The first is the hyper-advanced AI-based sensor system that gives it really nuanced behavior to navigate an aisle and safely decelerate and classify humans so that it moves more slowly to give a sense of safety. It's not actually in most cases a safety certified perception system. It's a long process. Most customers want to avoid that.

So they have a secondary redundant perception system that is far simpler. That’s what our on-sensor zone monitoring provides to customers. Ouster is putting in all the effort to safety certify that secondary zone monitoring system running right on the sensor. It can say there's an object right in front of you. Now it's really close. Now you need to emergency stop. So it's a really good way of guaranteeing you're not going to hurt someone, and it's of extreme value to customers because they can avoid building it all themselves.
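Editor's illustration: the zone-monitoring idea described above (define a 3D box around the sensor, then raise a trigger when enough returns land inside it) can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not Ouster's firmware or API. The `Zone` class, the `min_points` threshold, and the axis-aligned box shape are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters, in the sensor frame


@dataclass(frozen=True)
class Zone:
    """Hypothetical axis-aligned 3D box in the sensor frame."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float
    min_points: int = 5  # assumed noise guard: hits needed to count as occupied

    def contains(self, p: Point) -> bool:
        x, y, z = p
        return (self.x_min <= x <= self.x_max
                and self.y_min <= y <= self.y_max
                and self.z_min <= z <= self.z_max)


def zone_occupied(zone: Zone, points: Iterable[Point]) -> bool:
    """True when at least `min_points` LiDAR returns fall inside the zone."""
    hits = sum(1 for p in points if zone.contains(p))
    return hits >= zone.min_points


if __name__ == "__main__":
    # A stop zone 0 to 1.5 m ahead of a forklift, 1 m wide, 2 m tall.
    stop_zone = Zone(0.0, 1.5, -0.5, 0.5, 0.0, 2.0, min_points=3)
    frame = [(1.0, 0.1, 0.5), (1.1, 0.0, 0.9), (1.2, -0.1, 1.3), (8.0, 2.0, 0.5)]
    if zone_occupied(stop_zone, frame):
        print("zone occupied: request emergency stop")
```

The appeal of a check this simple is that it is easy to reason about exhaustively, which is consistent with the point above that the redundant system is the one worth safety certifying.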

CitriniResearch

Can we speak about the different technologies and product options available in LiDAR right now? I’ve been reading a bit about SPAD, MEMS and FMCW.

Angus Pacala

It's a bit of a mix and match. There are theories of operation like time-of-flight, there are scanning mechanisms to move a laser beam around the scene, and then there are detector technologies, meaning whatever is registering the photons that are bouncing back to you. Almost all LiDARs are what are called direct time-of-flight LiDARs, meaning they pulse out light, start a stopwatch, light bounces off an object, and it comes back to the receiver and you stop the stopwatch. Super reliable, simple, and it works really well. There is one other option which is called FMCW (Frequency Modulated Continuous Wave) LiDAR. It's a niche product that's unproven in the market and uses a more radar-like technology akin to Doppler shifting. It has a bunch of drawbacks. The “easy path” in LiDAR is direct time-of-flight. You can just hammer on the core performance attributes of direct time-of-flight LiDAR, and that's what most customers seem to want, and that's what Ouster does. On scanning mechanisms, if it's a solid state device there's no scanning, it's just flashing the scene. That's what our DF sensor does. You can have a raster scan where you have a bunch of oscillating mirrors like MEMS mirrors that raster scan a laser across the scene.

Or you can spin a LiDAR like Ouster’s OS sensors. Spinning is great, customers want them and having a 360-degree panoramic 3D image of your scene is valuable for the majority of customers. You need to see in a forklift when you're driving forward and back and turning side to side.

Then there's the detector. Ouster uses SPADs, that's what you mentioned, that's single photon avalanche diodes. SPADs are basically a detector type that can be built in standard CMOS silicon. So we're a digital LiDAR company and we're actually the first company to commercialize a SPAD LiDAR because the two go hand-in-hand. There are some other technologies you can use for detectors, but they're not compatible with standard CMOS so that's a huge drawback. That means you can't put 100 million transistors right next to that SPAD to do all kinds of advanced signal processing, which is what Ouster does. And so it makes for less capable systems overall.

CitriniResearch

I think this is a good segue: Nvidia's boards are handling perception and control in a single system-on-chip. Do you have a roadmap to push ROS 2 point cloud filtering into your sensor to offload compute?

Angus Pacala

One of the great things about Ouster's roadmap and the LiDAR roadmap in general is that we have a great example in cameras of what a technology roadmap could look like. Cameras in the last 15 years have gone from a novelty to ubiquity. Cameras went through a transition: the first digital cameras just needed to improve in raw resolution and SNR (signal-to-noise ratio). That’s kind of still where LiDAR is today. We want to improve the raw characteristics of the point cloud. Cameras in the last five years are now putting edge AI perception directly into the camera modules. So with edge AI, whether it's an Nvidia chip or some other edge processor being used, you're building smart edge cameras.

I think LiDAR will naturally move in that direction, but I think it's another five years before we directly embed that capability into one of our products. We're still in the world where it makes more sense to improve just the LiDAR and the point cloud and then have a host computer do all the processing. But yeah, I look at what has happened with cameras and see it as kind of the obvious blueprint for where LiDAR is going.

CitriniResearch

That's interesting. I like that. So, and then Nvidia, like we mentioned at the beginning, right? They're kind of this gravity well for robotics compute with Jetson and Isaac Sim, so how deep is your partnership with Nvidia today? And what milestones make that a strategic partnership rather than a tactical one?

Angus Pacala

The first thing I’ll say about Nvidia is why are they doing so well? Their chips are deeply entrenched in these customers. Let’s set aside graphics cards where a consumer can buy a different graphics card every year. All their industrial, robotics, automotive customers, they have the same dynamic as Ouster’s customer base because they’re all the same customers. They have three-to-five year development cycles, huge investment in building a perception system around an Nvidia chip. And the corollary, it’s the same cycle to build something around an Ouster LiDAR data stream. We have a thousand end customers and immense inertia in that customer base moving towards production. These customers do not turn on a dime.

So it’s really important that we got out first and started working with customers like John Deere many years ago because of the inertia that’s inherently built into systems. That gives me a lot of confidence on where Ouster is going commercially because we are tapping into this same dynamic that Nvidia has been benefiting from by getting their chip into all these systems with the same inertia. We just announced last month how Ouster has been working with Nvidia’s chips and technology to bring physical AI to smart infrastructure.

It’s a product that Ouster has built called Blue City, a smart traffic control solution that changes the lights at an intersection far more efficiently and affordably and accurately than ever before. So we bring two LiDAR sensors and an Nvidia GPU to the poles at an intersection. That system runs a deep learning model on the live sensor streams to perceive everything in the environment and track it and make predictions. So it's like an omniscient perception system, very similar in architecture to what an autonomous vehicle would be doing, and hyper-capable, hyper-reliable. We’ve trained it on over 4 million annotated objects in LiDAR data that we’ve collected over the last couple years.

We have a huge training data set and validation set to prove that we can classify a person, a car, a bicyclist, a motorcyclist, a big truck, and a small truck in all kinds of diverse environments and weather. The whole thing is built on the Nvidia compute architecture and all of the tooling that has been built up around deep learning, things like PyTorch, which is built on CUDA. That investment has paid off. We announced we’re deploying Blue City at over 100 intersections in Chattanooga to run and automate all of their downtown corridor with a superior traffic system.

So people in Chattanooga should experience less time waiting at lights. They should experience much more intelligence about things like left turn signals staying on for the right amount of time because there are more cars there. For the first time, a system can detect people waiting or entering the crosswalk. Your crosswalk has just been a simple timer for all of history. Now it’s going to be something that’s intelligent, that gives you more or less time, depending on if you got through the crosswalk in time or not. So that’s been a great success story in working with Nvidia.

Nvidia has all these other tentacles into other verticals, like industrials, warehousing, robotics and automotive. We have all our sensors supported in Nvidia DRIVE, Nvidia Omniverse, and on the Jetson platform. We’ve made sure that we’re collaborating wherever Nvidia’s verticals overlap with the verticals that Ouster is playing in. We’ve made sure to have this tight collaboration on getting our sensors supported by all these suites that they provide to their end customers. So it’s been fun.

CitriniResearch

When it comes to humanoids, are there any differences in the use cases they're trying to test, or spec tweaks that have surprised you?

Angus Pacala

There's all kinds of interesting nuances. Humanoid robotics needs incredibly fine levels of perception for manipulating objects in a robot's hands and navigating the very tight spaces of a house. So the requirements are pretty different than a lot of the sensors that we offer today. We have plenty of time to develop the perfect fit if there's a good opportunity.

Sensors we make are still built to see hundreds of meters out and down to zero minimum range. That's completely overkill for these use cases. So there's a lot we can do to constrain the cost and performance to go in a humanoid robot. We have great market intelligence on what would be needed to place our bets in the right places. A bet is the probability of the whole technology working and the timeline that it works on. For me, humanoids are still in this iffy spot, kind of like the 2015-era for robotaxis.

I know that humanoid robots are going to work on some timeline, but I'm not an oracle. I really think it's going to be later than we all hope as they get into all the edge cases because again, these things have to be close to perfect. Perfect on the safety side and close to perfect on, do they fold your laundry right?

So, we have approached the humanoid market with a bit of hedging on how much of that materializes and just acknowledging it's going to be a long R&D cycle. We will get a lot of irons in the fire just like we did five years ago within industrials and that's potentially a great business strategy too.

CitriniResearch

Do you have any thoughts on China’s role and the cost curve for these?

Angus Pacala

I think cost reductions are going to be there very soon because there's a million Chinese companies doing this. Don’t bet against them to bring the cost down. I think Unitree's humanoid robot is already the price of a used car.

CitriniResearch

So, two of your biggest competitors, Hesai and RoboSense, are both in China. What do you see? What are the risks and the opportunities there for Ouster?

Angus Pacala

There's a similar dynamic where the Chinese are going to cut costs in a technology set and price more aggressively than Western companies. That's pretty much true across any industry. For LiDAR, and the industries that we play in, it's not all about price. Performance, reliability, security, and customer support are incredibly important.

Ouster has top-of-the-line performance. The reliability of a vendor, the support that they give, the ease of use, the ease of development, the software provided, the core quality of the product, and its lifetime are incredibly important to a customer. They become less price sensitive once you hit a price that makes their autonomy stack commercially viable. They're not trying to eke out the next hundred dollars necessarily so much as they're trying to eke out another year of lifetime, another year on the warranty, improved field application support and a better set of software tools to offset software development costs. So that changes the dynamic versus the humanoid robotics space.

For consumer technologies where there's a very low barrier to entry, price and cost are everything. Once the software stack is working, it's just all about cost because consumers feel the pain in their wallet. So Ouster right now is not operating in businesses that have the dynamics of consumer electronics and consumer goods. The closest we get is cars, but LiDARs aren’t in consumer cars in the Western world. So we're not really in that space yet because it's not ready yet. That's where price comes into play because consumers feel the pain in their wallet, but there are still significant barriers to entry or ways to be extremely competitive outside of price because cars are safety critical.

Certified devices and supplier relationships are really important for an OEM. Geopolitics does matter for a lot of these applications. US carmakers need to think about where their supply chains are coming from, and in some cases they are going to prefer a US or Western supplier over a Chinese one. As a US supplier, geopolitics and this kind of dynamic create a preference for us. Likewise, that makes it easier for Hesai to sell to Chinese companies. The world has kind of been carved up on a lot of these critical emerging technologies.

You look at Nvidia GPUs and they're constrained in where they can sell their products now. So definitely it's affected the entire landscape and the competitive dynamics.

CitriniResearch

And so for your own supply chain, are you relatively insulated from China? I saw you said that 10% tariffs are manageable. Where do you produce most of your components?

Angus Pacala

We saw the shift in geopolitics a couple years ago and we started divesting our Chinese supply chain just knowing that would probably be something that was preferred by a bunch of our customers. We also work with DOD customers, so we made it a strategic initiative inside Ouster.

We manufacture in Thailand, which right now faces a 10% tariff, and at this point it's an immaterial impact to Ouster's business, which is what we said on the earnings call.

CitriniResearch

I saw that your Q1 2025 gross margin was about 41%. Can you walk me through where you think the next 500 basis points come from, which cost buckets move first, and what you see accelerating that curve?

Angus Pacala

We’ve staunchly held to a 35 to 40% target that we put out in our financial framework. We see tailwinds potentially from product roadmap innovation, cost-down of the product itself, overhead absorption, and the growth of our software-attached business, which is margin accretive. Our Blue City and Gemini sales have a higher margin on average than our sensor-only sales, which is exactly where you want to be and why we’ve invested so much in this area.

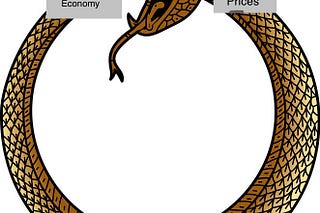

We see these margin tailwinds, but there's also the headwinds of customers going into production at lower ASPs and higher volumes. We know that ASPs are going to drop over time. That’s true of any healthy business that drives significant unit expansion.

So, certainly it’s a goal of mine to expand our margins beyond where our framework is. It’s possible Smart Infrastructure software solutions grow at a faster rate than the rest of our verticals, and then we could see some margin expansion. But also I’d be happy to see our industrial accounts 10x their unit volumes and trade off a little margin.

CitriniResearch

And so at what quarterly shipment volume would you expect to see break-even?

Chen Geng

Our sell-side analysts are in the late 2026, early 2027 time frame. We don't provide guidance on when we expect to reach profitability, but we do provide a financial framework to build your own assumptions.

CitriniResearch

So, an auto customer might demand a 10-year warranty, a city might have a service deal, and you might have sensor swap subscriptions with an OEM. If you were going to rank the three on lifetime gross margin dollars, how would you approach that, or how should we think about that?

Chen Geng

It's really customer by customer across the verticals. Some customers are buying on a spot basis, some have multi-year contracts with extended warranties that last multiple years. It's hard to generalize by vertical. There are certain applications that require uptime. For example, with Blue City we are supporting traffic intersections, and we can't just shut off our service and leave a city without the ability to monitor. So for those software sales, those are perpetual licenses versus other applications where we can sell them on a recurring annual basis.

CitriniResearch

I have a last question about looking out to 2030. How do you expect your end markets to evolve and what would you be looking for to say that you won the opportunities that you're going after?

Chen Geng

We see our market as a $70 billion opportunity and our guidance for the second quarter is $32 to $35 million of revenue. We're just beginning to tap into the opportunity, and one of the things that we get asked is, what inning are you in? I don't think we're actually in an inning. We're still in the locker room putting on our cleats, still stretching it out. It's very early stages in terms of the adoption for LiDAR. In five years we'll continue to see our customers move from prototypes to commercialization. We'll continue to drive volume growth, continue to drive new products. I think 2030 is still relatively early in the adoption of LiDAR.

We certainly don't feel constrained in terms of our growth. I think LiDAR continues to grow for decades, not years.

CitriniResearch

Is there any specific area that you would say you guys are most excited about?

Chen Geng

We sell LiDAR all the way from the mine or the farm to the warehouse, to the delivery trucks, to the last mile delivery robots. Anything that has the ability to take data and be spatially intelligent and turn that data into actionable insights is an opportunity for LiDAR. What's so exciting about Ouster as a company, and about LiDAR as an opportunity, is that it's a technology that has truly global ramifications, and Ouster is at the forefront of that revolution.

CitriniResearch

And then the last question we ask every company: what did we fail to ask that you wish investors understood about Ouster?

Chen Geng

Investors generally don't have as much clarity on the non-automotive opportunity. What we've really focused on over the past few years is trying to help people understand that the LiDAR opportunity outside of automotive is larger than automotive itself. There are significant end markets with multiple million units of potential, with the ability to adopt LiDAR at a faster rate than automotive and potentially bring better economics than automotive.
