The Prompt Engineering Playbook for Programmers

程序员的提示工程手册

Turn AI coding assistants into more reliable development partners

将 AI 编码助手转变为更可靠的开发伙伴

Developers are increasingly relying on AI coding assistants to accelerate our daily workflows. These tools can autocomplete functions, suggest bug fixes, and even generate entire modules or MVPs. Yet, as many of us have learned, the quality of the AI’s output depends largely on the quality of the prompt you provide. In other words, prompt engineering has become an essential skill. A poorly phrased request can yield irrelevant or generic answers, while a well-crafted prompt can produce thoughtful, accurate, and even creative code solutions. This write-up takes a practical look at how to systematically craft effective prompts for common development tasks.

开发者越来越依赖 AI 编码助手来加速我们的日常工作流程。这些工具可以自动补全函数,建议修复错误,甚至生成完整的模块或最小可行产品(MVP)。然而,正如我们许多人所体会到的,AI 输出的质量在很大程度上取决于你提供的提示质量。换句话说,提示工程已成为一项必备技能。措辞不当的请求可能会产生无关或泛泛的答案,而精心设计的提示则能生成深思熟虑、准确甚至富有创意的代码解决方案。本文将实用地探讨如何系统地为常见开发任务设计有效的提示。

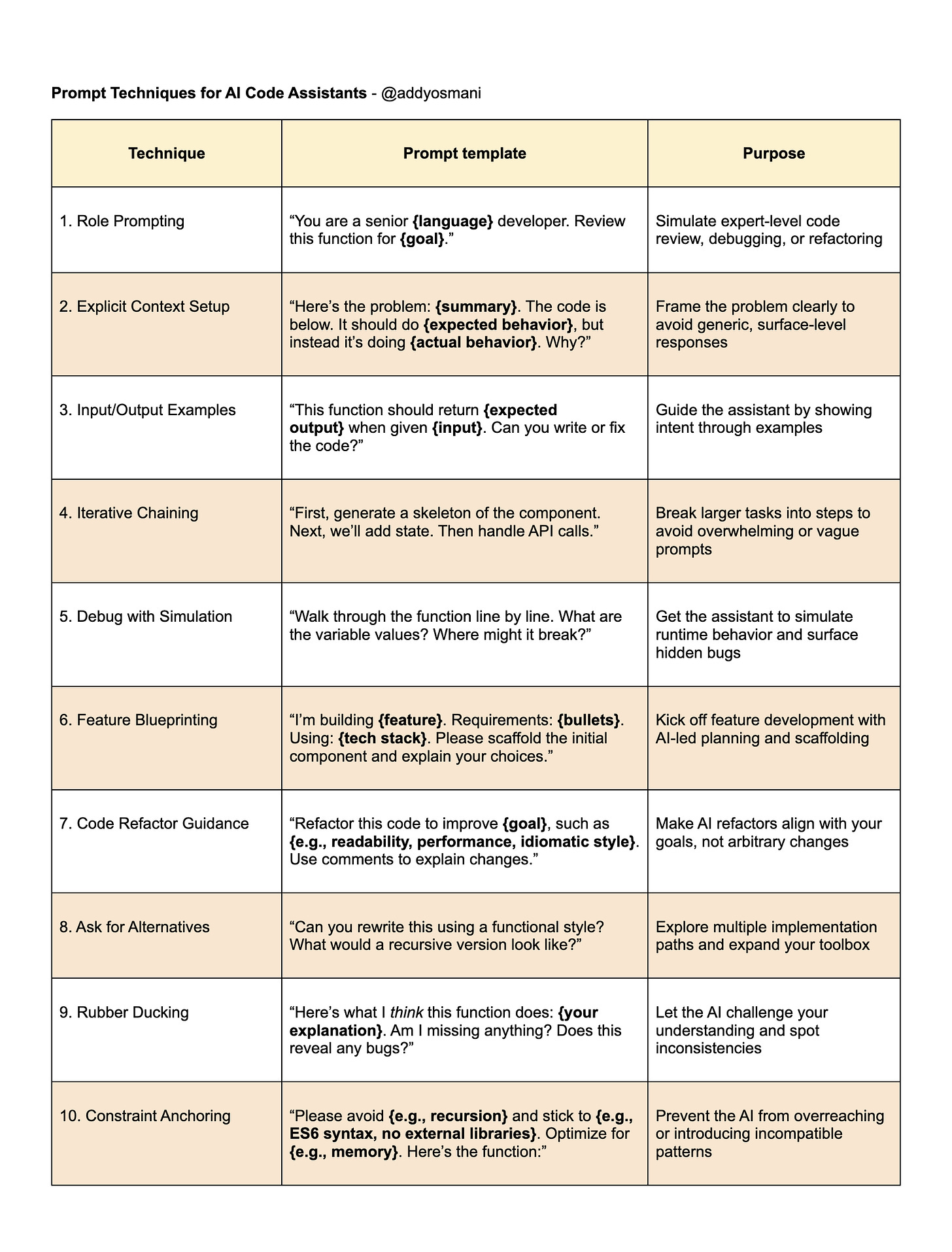

AI pair programmers are powerful but not magical – they have no prior knowledge of your specific project or intent beyond what you tell them or include as context. The more information you provide, the better the output. We’ll distill key prompt patterns, repeatable frameworks, and memorable examples that have resonated with developers. You’ll see side-by-side comparisons of good vs. bad prompts with actual AI responses, along with commentary to understand why one succeeds where the other falters. Here’s a cheat sheet to get started:

AI 配对程序员功能强大,但并非神奇——它们对你的具体项目或意图没有先验知识,除非你告诉它们或作为上下文提供。你提供的信息越多,输出效果越好。我们将提炼关键的提示模式、可重复使用的框架以及令开发者印象深刻的示例。你将看到好提示与差提示的并列对比及实际 AI 响应,并附有解说,帮助理解为何一个成功而另一个失败。以下是入门速查表:

Foundations of effective code prompting

有效代码提示的基础

Prompting an AI coding tool is somewhat like communicating with a very literal, sometimes knowledgeable collaborator. To get useful results, you need to set the stage clearly and guide the AI on what you want and how you want it.

提示 AI 编码工具有点像与一个非常字面、偶尔有知识的合作者交流。要获得有用的结果,你需要清晰地设定场景并指导 AI 你想要什么以及如何想要。

Below are foundational principles that underpin all examples in this playbook:

以下是支撑本手册所有示例的基础原则:

Provide rich context. Always assume the AI knows nothing about your project beyond what you provide. Include relevant details such as the programming language, framework, and libraries, as well as the specific function or snippet in question. If there’s an error, provide the exact error message and describe what the code is supposed to do. Specificity and context make the difference between vague suggestions and precise, actionable solutions . In practice, this means your prompt might include a brief setup like: “I have a Node.js function using Express and Mongoose that should fetch a user by ID, but it throws a TypeError. Here’s the code and error…”. The more setup you give, the less the AI has to guess.

提供丰富的上下文。始终假设 AI 对你的项目一无所知,除了你提供的信息。包括相关细节,如编程语言、框架和库,以及具体的函数或代码片段。如果有错误,提供准确的错误信息并描述代码应完成的功能。具体性和上下文决定了模糊建议与精确、可操作解决方案之间的区别。实际上,这意味着你的提示可能包含简短的背景介绍,比如:“我有一个使用 Express 和 Mongoose 的 Node.js 函数,应该通过 ID 获取用户,但它抛出了 TypeError。以下是代码和错误信息……”。你提供的背景越多,AI 猜测的空间就越小。Be specific about your goal or question. Vague queries lead to vague answers. Instead of asking something like “Why isn’t my code working?”, pinpoint what insight you need. For example: “This JavaScript function is returning undefined instead of the expected result. Given the code below, can you help identify why and how to fix it?” is far more likely to yield a helpful answer. One prompt formula for debugging is: “It’s expected to do [expected behavior] but instead it’s doing [current behavior] when given [example input]. Where is the bug?” . Similarly, if you want an optimization, ask for a specific kind of optimization (e.g. “How can I improve the runtime performance of this sorting function for 10k items?”). Specificity guides the AI’s focus .

明确你的目标或问题。模糊的提问会导致模糊的回答。与其问“为什么我的代码不工作?”,不如明确你需要什么样的见解。例如:“这个 JavaScript 函数返回了 undefined,而不是预期的结果。根据下面的代码,你能帮忙找出原因并修复吗?”这样更有可能得到有用的答案。调试的一个提示公式是:“预期行为是[预期行为],但在输入[示例输入]时却表现为[当前行为]。错误出在哪里?”同样,如果你想要优化,应该具体说明想要哪种优化(例如:“如何提升这个排序函数处理 1 万条数据时的运行性能?”)。具体性能引导 AI 的关注点。Break down complex tasks. When implementing a new feature or tackling a multi-step problem, don’t feed the entire problem in one gigantic prompt. It’s often more effective to split the work into smaller chunks and iterate. For instance, “First, generate a React component skeleton for a product list page. Next, we’ll add state management. Then, we’ll integrate the API call.” Each prompt builds on the previous. It’s often not advised to ask for a whole large feature in one go; instead, start with a high-level goal and then iteratively ask for each piece . This approach not only keeps the AI’s responses focused and manageable, but also mirrors how a human would incrementally build a solution.

将复杂任务拆解。当实现新功能或处理多步骤问题时,不要将整个问题一次性输入到一个巨大的提示中。将工作拆分成更小的部分并进行迭代通常更有效。例如,“首先,生成一个产品列表页面的 React 组件骨架。接下来,我们将添加状态管理。然后,我们将集成 API 调用。”每个提示都建立在前一个的基础上。通常不建议一次性请求整个大型功能;相反,应从一个高层次目标开始,然后逐步请求每个部分。这种方法不仅使 AI 的响应更集中、更易管理,也模拟了人类逐步构建解决方案的过程。Include examples of inputs/outputs or expected behavior. If you can illustrate what you want with an example, do it. For example, “Given the array [3,1,4], this function should return [1,3,4].” Providing a concrete example in the prompt helps the AI understand your intent and reduces ambiguity . It’s akin to giving a junior developer a quick test case – it clarifies the requirements. In prompt engineering terms, this is sometimes called “few-shot prompting,” where you show the AI a pattern to follow. Even one example of correct behavior can guide the model’s response significantly.

包含输入/输出示例或预期行为。如果你能用一个例子说明你的需求,请务必这样做。例如,“给定数组 [3,1,4],该函数应返回 [1,3,4]。”在提示中提供具体示例有助于 AI 理解你的意图,减少歧义。这就像给初级开发者一个快速测试用例——它明确了需求。在提示工程术语中,这有时被称为“少量示例提示”,即向 AI 展示一个模式。即使只有一个正确行为的示例,也能显著引导模型的响应。Leverage roles or personas. A powerful technique popularized in many viral prompt examples is to ask the AI to “act as” a certain persona or role. This can influence the style and depth of the answer. For instance, “Act as a senior React developer and review my code for potential bugs” or “You are a JavaScript performance expert. Optimize the following function.” By setting a role, you prime the assistant to adopt the relevant tone – whether it’s being a strict code reviewer, a helpful teacher for a junior dev, or a security analyst looking for vulnerabilities. Community-shared prompts have shown success with this method, such as “Act as a JavaScript error handler and debug this function for me. The data isn’t rendering properly from the API call.” . In our own usage, we must still provide the code and problem details, but the role-play prompt can yield more structured and expert-level guidance.

利用角色或身份。一个在许多流行提示示例中广泛使用的强大技巧是让 AI“扮演”某个特定的角色或身份。这可以影响回答的风格和深度。例如,“扮演一名高级 React 开发者,帮我检查代码中的潜在错误”或“你是一名 JavaScript 性能专家,优化以下函数。”通过设定角色,你可以引导助手采用相应的语气——无论是严格的代码审查者、对初级开发者有帮助的教师,还是寻找漏洞的安全分析师。社区共享的提示已经证明了这种方法的成功,比如“扮演 JavaScript 错误处理器,帮我调试这个函数。API 调用的数据没有正确渲染。”在我们自己的使用中,仍需提供代码和问题细节,但角色扮演的提示可以带来更有结构和专业水平的指导。

Iterate and refine the conversation. Prompt engineering is an interactive process, not a one-shot deal. Developers often need to review the AI’s first answer and then ask follow-up questions or make corrections. If the solution isn’t quite right, you might say, “That solution uses recursion, but I’d prefer an iterative approach – can you try again without recursion?” Or, “Great, now can you improve the variable names and add comments?” The AI remembers the context in a chat session, so you can progressively steer it to the desired outcome. The key is to view the AI as a partner you can coach – progress over perfection on the first try .

反复迭代并完善对话。提示工程是一个互动过程,而非一次性完成。开发者通常需要审查 AI 的首次回答,然后提出后续问题或进行修正。如果解决方案不太合适,你可以说:“这个方案用了递归,但我更喜欢迭代方法——你能不使用递归再试一次吗?”或者,“很好,现在你能改进变量名并添加注释吗?”AI 会记住聊天会话中的上下文,因此你可以逐步引导它达到理想结果。关键是将 AI 视为一个可以指导的伙伴——重在进步,而非第一次就完美。Maintain code clarity and consistency. This last principle is a bit indirect but very important for tools that work on your code context. Write clean, well-structured code and comments, even before the AI comes into play. Meaningful function and variable names, consistent formatting, and docstrings not only make your code easier to understand for humans, but also give the AI stronger clues about what you’re doing. If you show a consistent pattern or style, the AI will continue it . Treat these tools as extremely attentive junior developers – they take every cue from your code and comments.

保持代码的清晰和一致性。最后这一原则有些间接,但对于处理你代码上下文的工具来说非常重要。在 AI 介入之前,就要编写干净、结构良好的代码和注释。具有意义的函数和变量名、一致的格式以及文档字符串不仅使你的代码更易于人类理解,也为 AI 提供了更强的线索,帮助它理解你的意图。如果你展示出一致的模式或风格,AI 也会继续保持。把这些工具当作极其细心的初级开发者——它们会从你的代码和注释中捕捉每一个提示。

With these foundational principles in mind, let’s dive into specific scenarios. We’ll start with debugging, perhaps the most immediate use-case: you have code that’s misbehaving, and you want the AI to help figure out why.

牢记这些基本原则,让我们深入具体场景。我们将从调试开始,这也许是最直接的用例:你的代码出现了问题,你希望 AI 帮助找出原因。

Prompt patterns for debugging code

调试代码的提示模式

Debugging is a natural fit for an AI assistant. It’s like having a rubber-duck that not only listens, but actually talks back with suggestions. However, success largely depends on how you present the problem to the AI. Here’s how to systematically prompt for help in finding and fixing bugs:

调试非常适合由 AI 助手来完成。这就像拥有一个不仅会倾听,还会用建议回应的橡皮鸭。然而,成功很大程度上取决于你如何向 AI 呈现问题。以下是系统地提示 AI 帮助查找和修复错误的方法:

1. Clearly describe the problem and symptoms. Begin your prompt by describing what is going wrong and what the code is supposed to do. Always include the exact error message or incorrect behavior. For example, instead of just saying “My code doesn’t work,” you might prompt: “I have a function in JavaScript that should calculate the sum of an array of numbers, but it’s returning NaN (Not a Number) instead of the actual sum. Here is the code: [include code]. It should output a number (the sum) for an array of numbers like [1,2,3], but I’m getting NaN. What could be the cause of this bug?” This prompt specifies the language, the intended behavior, the observed wrong output, and provides the code context – all crucial information. Providing a structured context (code + error + expected outcome + what you’ve tried) gives the AI a solid starting point . By contrast, a generic question like “Why isn’t my function working?” yields meager results – the model can only offer the most general guesses without context.

1. 清楚描述问题和症状。开始提问时,说明出现了什么问题以及代码应该实现的功能。务必包含准确的错误信息或不正确的行为。例如,不要仅仅说“我的代码不起作用”,而是可以这样提问:“我有一个 JavaScript 函数,应该计算一个数字数组的和,但它返回了 NaN(不是数字),而不是实际的和。代码如下:[包含代码]。它应该对像 [1,2,3] 这样的数字数组输出一个数字(和),但我得到的是 NaN。这个错误可能的原因是什么?”这个提问明确了语言、预期行为、观察到的错误输出,并提供了代码内容——这些都是关键信息。提供结构化的信息(代码 + 错误 + 预期结果 + 你尝试过的内容)能为 AI 提供坚实的起点。相比之下,像“为什么我的函数不起作用?”这样泛泛的问题,得到的结果很有限——模型只能给出最笼统的猜测,缺乏具体上下文。

2. Use a step-by-step or line-by-line approach for tricky bugs. For more complex logic bugs (where no obvious error message is thrown but the output is wrong), you can prompt the AI to walk through the code’s execution. For instance: “Walk through this function line by line and track the value of total at each step. It’s not accumulating correctly – where does the logic go wrong?” This is an example of a rubber duck debugging prompt – you’re essentially asking the AI to simulate the debugging process a human might do with prints or a debugger. Such prompts often reveal subtle issues like variables not resetting or incorrect conditional logic, because the AI will spell out the state at each step. If you suspect a certain part of the code, you can zoom in: “Explain what the filter call is doing here, and if it might be excluding more items than it should.” Engaging the AI in an explanatory role can surface the bug in the process of explanation.

2. 对于棘手的错误,采用逐步或逐行的方法。对于更复杂的逻辑错误(没有明显的错误信息但输出错误),你可以让 AI 逐步执行代码。例如:“逐行走查这个函数,跟踪每一步中 total 的值。它没有正确累加——逻辑哪里出错了?”这是橡皮鸭调试提示的一个例子——你实际上是在让 AI 模拟人类通过打印或调试器进行调试的过程。这类提示常常能揭示细微的问题,比如变量未重置或条件逻辑错误,因为 AI 会详细说明每一步的状态。如果你怀疑代码的某个部分,可以放大细节:“解释这里的 filter 调用在做什么,它是否可能排除了比应排除更多的项。”让 AI 扮演解释者的角色,可以在解释过程中发现错误。

3. Provide minimal reproducible examples when possible. Sometimes your actual codebase is large, but the bug can be demonstrated in a small snippet. If you can extract or simplify the code that still reproduces the issue, do so and feed that to the AI. This not only makes it easier for the AI to focus, but also forces you to clarify the problem (often a useful exercise in itself). For example, if you’re getting a TypeError in a deeply nested function call, try to reproduce it with a few lines that you can share. Aim to isolate the bug with the minimum code, make an assumption about what’s wrong, test it, and iterate . You can involve the AI in this by saying: “Here’s a pared-down example that still triggers the error [include snippet]. Why does this error occur?” By simplifying, you remove noise and help the AI pinpoint the issue. (This technique mirrors the advice of many senior engineers: if you can’t immediately find a bug, simplify the problem space. The AI can assist in that analysis if you present a smaller case to it.)

3. 尽可能提供最小可复现的示例。有时你的实际代码库很大,但错误可以通过一小段代码演示。如果你能提取或简化仍能复现问题的代码,请这样做并提供给 AI。这不仅让 AI 更容易集中注意力,也迫使你澄清问题(这本身往往是一个有益的练习)。例如,如果你在深层嵌套的函数调用中遇到 TypeError,尝试用几行代码复现并分享。目标是用最少的代码隔离错误,假设问题所在,进行测试并反复迭代。你可以这样让 AI 参与:“这是一个简化的示例,仍然会触发错误[包含代码片段]。为什么会出现这个错误?”通过简化,你去除了干扰,帮助 AI 准确定位问题。(这一技巧与许多资深工程师的建议相似:如果你无法立即找到错误,就简化问题空间。如果你向 AI 提供一个更小的案例,它可以协助分析。)

4. Ask focused questions and follow-ups. After providing context, it’s often effective to directly ask what you need, for example: “What might be causing this issue, and how can I fix it?” . This invites the AI to both diagnose and propose a solution. If the AI’s first answer is unclear or partially helpful, don’t hesitate to ask a follow-up. You could say, “That explanation makes sense. Can you show me how to fix the code? Please provide the corrected code.” In a chat setting, the AI has the conversation history, so it can directly output the modified code. If you’re using an inline tool like Copilot in VS Code or Cursor without a chat, you might instead write a comment above the code like // BUG: returns NaN, fix this function and see how it autocompletes – but in general, the interactive chat yields more thorough explanations. Another follow-up pattern: if the AI gives a fix but you don’t understand why, ask “Can you explain why that change solves the problem?” This way you learn for next time, and you double-check that the AI’s reasoning is sound.

4. 提出有针对性的问题和后续问题。在提供背景信息后,直接询问你需要的内容通常更有效,例如:“可能导致这个问题的原因是什么,我该如何修复?”这会引导 AI 既进行诊断又提出解决方案。如果 AI 的第一个回答不清楚或部分有帮助,不要犹豫,继续追问。你可以说:“这个解释很有道理。你能告诉我如何修复代码吗?请提供修改后的代码。”在聊天环境中,AI 拥有对话历史,因此可以直接输出修改后的代码。如果你使用的是像 VS Code 中的 Copilot 或 Cursor 这类没有聊天功能的内联工具,你可以在代码上方写注释,比如 // BUG: 返回 NaN,修复此函数,看看它如何自动补全——但总体来说,交互式聊天能提供更详尽的解释。另一种后续提问方式是:如果 AI 给出了修复方案但你不理解原因,可以问“你能解释为什么这个改动能解决问题吗?”这样你可以为下次学习,同时也能核实 AI 的推理是否合理。

Now, let’s illustrate these debugging prompt principles with a concrete example, showing a poor prompt vs. improved prompt and the difference in AI responses:

现在,让我们通过一个具体的例子来说明这些调试提示原则,展示一个糟糕的提示与改进后的提示以及 AI 响应的差异:

Debugging example: poor vs. improved prompt

调试示例:糟糕的提示与改进的提示

Imagine we have a simple Node.js function meant to convert a list of user objects to a lookup map by user ID. However, it’s throwing an error. Here’s the buggy code:

假设我们有一个简单的 Node.js 函数,旨在将用户对象列表转换为按用户 ID 索引的映射表。然而,它抛出了一个错误。以下是有问题的代码:

// Buggy function: converts array of users to a map by ID

function mapUsersById(users) {

const userMap = {};

for (let i = 0; i <= users.length; i++) {

// Note: using <= will go out of bounds on the last iteration

const user = users[i];

userMap[user.id] = user;

}

return userMap;

}

// Example usage:

const result = mapUsersById([{ id: 1, name: "Alice" }]);This code is supposed to iterate through the users array and build an object where keys are user IDs. However, there is a bug: the loop uses i <= users.length instead of i < users.length. This will cause users[i] to be undefined on the last iteration, likely leading to a runtime error (e.g., attempting to access property id of undefined). Let’s see how an AI assistant might respond to two different prompts about this issue.

这段代码应该遍历 users 数组并构建一个以用户 ID 为键的对象。然而,存在一个错误:循环使用了 i <= users.length 而不是 i < users.length。这会导致在最后一次迭代时 users[i] 为 undefined,可能引发运行时错误(例如,尝试访问 undefined 的 id 属性)。让我们看看 AI 助手如何针对这个问题的两个不同提示做出回应。

❌ Poor Prompt: “Why isn’t my mapUsersById function working?”

❌ 不良提示:“为什么我的 mapUsersById 函数不起作用?”

AI’s response to the poor prompt (simulated):

AI 对不良提示的回应(模拟):

It’s hard to say without more information. Are you sure the function is being called with a proper array of users? Perhaps the issue is that users is empty or not an array, causing the loop not to execute properly. It could also be that there’s an error when setting the property on userMap. Make sure that each user has an id property. If users is undefined or null, that would also cause it to not work.

没有更多信息很难判断。你确定函数是用一个正确的用户数组调用的吗?问题可能是 users 为空或不是数组,导致循环无法正常执行。也可能是在设置 userMap 属性时出现了错误。确保每个用户都有一个 id 属性。如果 users 是未定义或 null,也会导致函数无法工作。

Analysis: This answer is quite vague. The prompt gave no details except the function name, so the AI is guessing at generic causes (“maybe the array is empty or not an array”, “make sure each user has an id”). It did not identify the actual bug. This is the kind of response you get when the question is underspecified – the model can only regurgitate common debugging tips. As a developer, this isn’t very helpful because it doesn’t address the real mistake. The lack of context and specificity in the prompt yields a generic answer (essentially the AI equivalent of “have you tried turning it off and on again?”). This aligns with the community advice that vague prompts lead to irrelevant or generic answers .

分析:这个回答相当模糊。提示中除了函数名外没有提供任何细节,所以 AI 只能猜测一些通用原因(“可能数组是空的或者不是数组”,“确保每个用户都有 id”)。它并没有找出实际的错误。这种回答通常出现在问题描述不充分的情况下——模型只能重复常见的调试建议。作为开发者,这并不太有帮助,因为它没有解决真正的错误。提示中缺乏具体信息和细节,导致回答泛泛而谈(本质上相当于 AI 版的“你试过关机重启吗?”)。这与社区的建议一致,即模糊的提示会导致无关或泛泛的回答。

✅ Improved Prompt: “I have a JavaScript function mapUsersById that should convert an array of user objects into a map (object) keyed by user ID. However, it throws an error when I run it. For example, when I pass [ {id: 1, name: "Alice"} ], I get TypeError: Cannot read property 'id' of undefined. Here is the function code:

✅ 改进后的提示:“我有一个 JavaScript 函数 mapUsersById,应该将用户对象数组转换成以用户 ID 为键的映射(对象)。但是运行时会报错。例如,当我传入[ {id: 1, name: "Alice"} ]时,出现 TypeError: Cannot read property 'id' of undefined。以下是函数代码:

function mapUsersById(users) {

const userMap = {};

for (let i = 0; i <= users.length; i++) {

const user = users[i];

userMap[user.id] = user;

}

return userMap;

}It should return { "1": {id: 1, name: "Alice"} }. What is the bug and how can I fix it?”

它应该返回 { "1": {id: 1, name: "Alice"} }。是什么错误,我该如何修复?

AI’s response to the improved prompt (simulated):

AI 对改进后的提示的回应(模拟):

*The error Cannot read property 'id' of undefined suggests that at some point, user is undefined, and then you try to access user.id. Looking at your loop, the issue is with the loop bounds. You are using i <= users.length. If users.length is 1 (for one user in the array), the loop runs with i = 0 and i = 1. When i is 1, users[1] is undefined (since the only index in the array is 0). Thus user becomes undefined on that iteration, and user.id throws a TypeError. The fix is to change the loop condition to i < users.length so that i goes from 0 to users.length-1. For example:

*错误“Cannot read property 'id' of undefined”表明在某些时候,user 是未定义的,然后你尝试访问 user.id。查看你的循环,问题出在循环边界。你使用的是 i <= users.length。如果 users.length 是 1(数组中有一个用户),循环会在 i = 0 和 i = 1 时运行。当 i 为 1 时,users[1]是未定义的(因为数组中唯一的索引是 0)。因此,在那次迭代中 user 变成了未定义,user.id 会抛出 TypeError。解决方法是将循环条件改为 i < users.length,这样 i 就从 0 到 users.length-1。例如:

for (let i = 0; i < users.length; i++) {

const user = users[i];

userMap[user.id] = user;

}This way, i will be 0 for the first (and only) element, and then the loop stops. With that change, your function will no longer hit an undefined user and should work as expected, returning {"1": {id: 1, name: "Alice"}} for the given input.*

这样,i 在第一个(也是唯一一个)元素时为 0,然后循环停止。通过这个修改,你的函数将不再遇到未定义的 user,应该能按预期工作,返回{"1": {id: 1, name: "Alice"}}。*

Analysis: This response directly pinpoints the bug (using <= in the loop) and explains why it causes user to be undefined. It then provides the correct code solution (i < users.length) and confirms the expected outcome. The improved prompt succeeded because it followed our principles: it specified the language (JavaScript), described the function’s purpose, included the exact error message and a sample input, and even provided the code snippet in question. Notice how the AI used the error message as a clue to focus on the loop bounds – a targeted prompt enabled the AI to engage in true problem-solving, effectively simulating how a human debugger would think: “where could undefined come from? likely from the loop indexing”. This is a concrete demonstration of the benefit of detailed prompts.

分析:该回答直接指出了错误(循环中使用了 <=),并解释了为什么会导致 user 未定义。随后提供了正确的代码解决方案(i < users.length),并确认了预期的结果。改进后的提示成功是因为它遵循了我们的原则:指定了语言(JavaScript),描述了函数的用途,包含了准确的错误信息和示例输入,甚至提供了相关的代码片段。注意 AI 如何利用错误信息作为线索,聚焦于循环边界——有针对性的提示使 AI 能够进行真正的问题解决,有效地模拟了人类调试者的思考方式:“未定义可能来自哪里?很可能是循环索引”。这具体展示了详细提示的好处。

Additional Debugging Tactics: Beyond identifying obvious bugs, you can use prompt engineering for deeper debugging assistance:

额外的调试策略:除了识别明显的错误外,你还可以利用提示工程获得更深入的调试帮助:

Ask for potential causes. If you’re truly stumped, you can broaden the question slightly: “What are some possible reasons for a TypeError: cannot read property 'foo' of undefined in this code?” along with the code. The model might list a few scenarios (e.g. the object wasn’t initialized, a race condition, wrong variable scoping, etc.). This can give you angles to investigate that you hadn’t considered. It’s like brainstorming with a colleague.

询问潜在原因。如果你真的感到困惑,可以稍微扩大问题范围:“在这段代码中,导致 TypeError: cannot read property 'foo' of undefined 的可能原因有哪些?”并附上代码。模型可能会列出一些情景(例如对象未初始化、竞态条件、变量作用域错误等)。这可以为你提供一些之前未考虑过的调查角度,就像与同事头脑风暴一样。“Ask the Rubber Duck” – i.e., explain your code to the AI. This may sound counterintuitive (why explain to the assistant?), but the act of writing an explanation can clarify your own understanding, and you can then have the AI verify or critique it. For example: “I will explain what this function is doing: [your explanation]. Given that, is my reasoning correct and does it reveal where the bug is?” The AI might catch a flaw in your explanation that points to the actual bug. This technique leverages the AI as an active rubber duck that not only listens but responds.

“问橡皮鸭”——即向 AI 解释你的代码。这听起来可能有些反直觉(为什么要向助手解释?),但写下解释的过程可以澄清你自己的理解,然后你可以让 AI 验证或批评它。例如:“我将解释这个函数的作用:[你的解释]。基于此,我的推理是否正确,是否揭示了错误所在?”AI 可能会发现你解释中的缺陷,从而指出实际的错误。这个技巧利用了 AI 作为一个不仅倾听还会回应的主动橡皮鸭。Have the AI create test cases. You can ask: “Can you provide a couple of test cases (inputs) that might break this function?” The assistant might come up with edge cases you didn’t think of (empty array, extremely large numbers, null values, etc.). This is useful both for debugging and for generating tests for future robustness.

让 AI 创建测试用例。你可以问:“你能提供几个可能会让这个函数出错的测试用例(输入)吗?”助手可能会想出你没想到的边界情况(空数组、极大数值、空值等)。这对于调试和生成未来的稳健性测试都很有用。Role-play a code reviewer. As an alternative to a direct “debug this” prompt, you can say: “Act as a code reviewer. Here’s a snippet that isn’t working as expected. Review it and point out any mistakes or bad practices that could be causing issues: [code]”. This sets the AI into a critical mode. Many developers find that phrasing the request as a code review yields a very thorough analysis, because the model will comment on each part of the code (and often, in doing so, it spots the bug). In fact, one prompt engineering tip is to explicitly request the AI to behave like a meticulous reviewer . This can surface not only the bug at hand but also other issues (e.g. potential null checks missing) which might be useful.

扮演代码审查员的角色。作为直接“调试这个”提示的替代方案,你可以说:“扮演代码审查员的角色。这是一个未按预期工作的代码片段。请审查并指出可能导致问题的错误或不良实践:[代码]”。这会让 AI 进入一种批判模式。许多开发者发现,将请求表述为代码审查会产生非常彻底的分析,因为模型会对代码的每个部分进行评论(通常在此过程中发现了错误)。事实上,一个提示工程技巧是明确要求 AI 表现得像一个细致的审查员。这不仅能发现当前的错误,还可能揭示其他问题(例如可能缺失的空值检查),这可能很有用。

In summary, when debugging with an AI assistant, detail and direction are your friends. Provide the scenario, the symptoms, and then ask pointed questions. The difference between a flailing “it doesn’t work, help!” prompt and a surgical debugging prompt is night and day, as we saw above. Next, we’ll move on to another major use case: refactoring and improving existing code.

总之,在使用 AI 助手调试时,细节和方向是你的好帮手。提供场景、症状,然后提出具体的问题。正如我们上面看到的,漫无目的的“它不工作,帮帮我!”的提示和精准的调试提示之间的差别天壤之别。接下来,我们将进入另一个主要用例:重构和改进现有代码。

Prompt patterns for refactoring and optimization

重构与优化的提示模式

Refactoring code – making it cleaner, faster, or more idiomatic without changing its functionality – is an area where AI assistants can shine. They’ve been trained on vast amounts of code, which includes many examples of well-structured, optimized solutions. However, to tap into that knowledge effectively, your prompt must clarify what “better” means for your situation. Here’s how to prompt for refactoring tasks:

重构代码——在不改变其功能的前提下,使其更简洁、更快速或更符合惯用表达——是人工智能助手能够大显身手的领域。它们经过大量代码的训练,其中包含许多结构良好、优化完善的示例。然而,要有效利用这些知识,您的提示必须明确“更好”在您的具体情况下意味着什么。以下是进行重构任务的提示方法:

1. State your refactoring goals explicitly. “Refactor this code” on its own is too open-ended. Do you want to improve readability? Reduce complexity? Optimize performance? Use a different paradigm or library? The AI needs a target. A good prompt frames the task, for example: “Refactor the following function to improve its readability and maintainability (reduce repetition, use clearer variable names).” Or “Optimize this algorithm for speed – it’s too slow on large inputs.” By stating specific goals, you help the model decide which transformations to apply . For instance, telling it you care about performance might lead it to use a more efficient sorting algorithm or caching, whereas focusing on readability might lead it to break a function into smaller ones or add comments. If you have multiple goals, list them out. A prompt template from the Strapi guide suggests even enumerating issues: “Issues I’d like to address: 1) [performance issue], 2) [code duplication], 3) [outdated API usage].” . This way, the AI knows exactly what to fix. Remember, it will not inherently know what you consider a problem in the code – you must tell it.

1. 明确说明你的重构目标。“重构这段代码”本身过于宽泛。你是想提高可读性吗?减少复杂度?优化性能?使用不同的范式或库?AI 需要一个明确的目标。一个好的提示会框定任务,例如:“重构以下函数以提高其可读性和可维护性(减少重复,使用更清晰的变量名)。”或者“优化此算法以提升速度——它在处理大输入时太慢。”通过明确具体目标,你帮助模型决定应用哪些变换。例如,告诉它你关心性能,可能会让它使用更高效的排序算法或缓存,而关注可读性则可能让它将函数拆分成更小的部分或添加注释。如果你有多个目标,请列出来。Strapi 指南中的一个提示模板甚至建议列举问题:“我想解决的问题:1)[性能问题],2)[代码重复],3)[过时的 API 使用]。”这样,AI 就能准确知道要修复什么。记住,它不会自动知道你认为代码中的问题是什么——你必须告诉它。

2. Provide the necessary code context. When refactoring, you’ll typically include the code snippet that needs improvement in the prompt. It’s important to include the full function or section that you want to be refactored, and sometimes a bit of surrounding context if relevant (like the function’s usage or related code, which could affect how you refactor). Also mention the language and framework, because “idiomatic” code varies between, say, idiomatic Node.js vs. idiomatic Deno, or React class components vs. functional components. For example: “I have a React component written as a class. Please refactor it to a functional component using Hooks.” The AI will then apply the typical steps (using useState, useEffect, etc.). If you just said “refactor this React component” without clarifying the style, the AI might not know you specifically wanted Hooks.

2. 提供必要的代码上下文。在重构时,通常需要在提示中包含需要改进的代码片段。重要的是要包含你想要重构的完整函数或代码段,有时如果相关的话,也可以包括一些周边内容(比如函数的使用方式或相关代码,这可能会影响你的重构方式)。还要说明使用的语言和框架,因为“惯用”代码在不同环境中有所不同,比如惯用的 Node.js 与惯用的 Deno,或者 React 的类组件与函数组件。例如:“我有一个用类写的 React 组件,请将其重构为使用 Hooks 的函数组件。”AI 会按照典型步骤进行重构(使用 useState、useEffect 等)。如果你只是说“重构这个 React 组件”,而没有说明风格,AI 可能不知道你特别想要使用 Hooks。

Include version or environment details if relevant. For instance, “This is a Node.js v14 codebase” or “We’re using ES6 modules”. This can influence whether the AI uses certain syntax (like import/export vs. require), which is part of a correct refactoring. If you want to ensure it doesn’t introduce something incompatible, mention your constraints.

如果相关,请包含版本或环境细节。例如,“这是一个 Node.js v14 代码库”或“我们使用的是 ES6 模块”。这会影响 AI 是否使用某些语法(如 import/export 与 require),这是正确重构的一部分。如果你想确保它不会引入不兼容的内容,请说明你的限制条件。

3. Encourage explanations along with the code. A great way to learn from an AI-led refactor (and to verify its correctness) is to ask for an explanation of the changes. For example: “Please suggest a refactored version of the code, and explain the improvements you made.” This was even built into the prompt template we referenced: “…suggest refactored code with explanations for your changes.” . When the AI provides an explanation, you can assess if it understood the code and met your objectives. The explanation might say: “I combined two similar loops into one to reduce duplication, and I used a dictionary for faster lookups,” etc. If something sounds off in the explanation, that’s a red flag to examine the code carefully. In short, use the AI’s ability to explain as a safeguard – it’s like having the AI perform a code review on its own refactor.

3. 鼓励在代码的同时提供解释。从 AI 主导的重构中学习(并验证其正确性)的一个好方法是请求对更改进行解释。例如:“请建议一个重构后的代码版本,并解释你所做的改进。”这甚至被内置在我们参考的提示模板中:“……建议带有更改解释的重构代码。”当 AI 提供解释时,你可以评估它是否理解了代码并达到了你的目标。解释可能会说:“我将两个相似的循环合并为一个以减少重复,并使用字典来加快查找速度,”等等。如果解释中有不妥之处,那就是一个需要仔细检查代码的警示信号。简而言之,利用 AI 的解释能力作为保障——这就像让 AI 对其自身的重构进行代码审查。

4. Use role-play to set a high standard. As mentioned earlier, asking the AI to act as a code reviewer or senior engineer can be very effective. For refactoring, you might say: “Act as a seasoned TypeScript expert and refactor this code to align with best practices and modern standards.” This often yields not just superficial changes, but more insightful improvements because the AI tries to live up to the “expert” persona. A popular example from a prompt guide is having the AI role-play a mentor: “Act like an experienced Python developer mentoring a junior. Provide explanations and write docstrings. Rewrite the code to optimize it.” . The result in that case was that the AI used a more efficient data structure (set to remove duplicates) and provided a one-line solution for a function that originally used a loop . The role-play helped it not only refactor but also explain why the new approach is better (in that case, using a set is a well-known optimization for uniqueness).

4. 使用角色扮演来设定高标准。如前所述,让 AI 扮演代码审查员或高级工程师的角色非常有效。对于重构,你可以说:“扮演一位经验丰富的 TypeScript 专家,重构这段代码,使其符合最佳实践和现代标准。”这通常不仅带来表面的改动,还能带来更有见地的改进,因为 AI 会努力符合“专家”角色的期望。一个流行的示例是让 AI 扮演导师角色:“扮演一位有经验的 Python 开发者,指导初级开发者。提供解释并编写文档字符串。重写代码以进行优化。”结果是,AI 使用了更高效的数据结构(使用 set 来去重),并为原本使用循环的函数提供了一行代码的解决方案。角色扮演不仅帮助它完成了重构,还解释了为什么新方法更好(在这种情况下,使用 set 是众所周知的去重优化)。

Now, let’s walk through an example of refactoring to see how a prompt can influence the outcome. We will use a scenario in JavaScript (Node.js) where we have some less-than-ideal code and we want it improved.

现在,让我们通过一个重构的例子来看看提示如何影响结果。我们将使用一个 JavaScript(Node.js)场景,其中有一些不太理想的代码,我们希望对其进行改进。

Refactoring example: poor vs. improved prompt

重构示例:差的提示与改进的提示

Suppose we have a function that makes two database calls and does some processing. It works, but it’s not pretty – there’s duplicated code and it’s hard to read. We’d like to refactor it for clarity and efficiency. Here’s the original code:

假设我们有一个函数,它进行了两次数据库调用并做了一些处理。它能工作,但不够美观——代码重复且难以阅读。我们希望对其进行重构,以提高清晰度和效率。以下是原始代码:

// Original function: Fetches two lists and processes them (needs refactoring)

async function getCombinedData(apiClient) {

// Fetch list of users

const usersResponse = await apiClient.fetch('/users');

if (!usersResponse.ok) {

throw new Error('Failed to fetch users');

}

const users = await usersResponse.json();

// Fetch list of orders

const ordersResponse = await apiClient.fetch('/orders');

if (!ordersResponse.ok) {

throw new Error('Failed to fetch orders');

}

const orders = await ordersResponse.json();

// Combine data (match user to orders)

const result = [];

for (let user of users) {

const userOrders = orders.filter(o => o.userId === user.id);

result.push({ user, orders: userOrders });

}

return result;

}Issues in this code: We see duplicated fetch logic for users and orders (could be refactored into a helper or loop), lack of error detail (just a generic error message), and it could perhaps run the fetches in parallel to improve speed. Let’s see two prompting approaches:

这段代码的问题:我们看到用户和订单的获取逻辑重复(可以重构为辅助函数或循环),缺乏错误细节(只有通用的错误信息),并且可以考虑并行执行获取操作以提高速度。让我们看看两种提示方法:

❌ Prompt 1 (Underspecified): “Refactor the above getCombinedData function.”

❌ 提示 1(描述不充分):“重构上述的 getCombinedData 函数。”

AI’s response to Prompt 1:

AI 对提示 1 的回应:

*Sure. Here’s a refactored version of getCombinedData:

*好的。以下是重构后的 getCombinedData 函数版本:

async function getCombinedData(apiClient) {

// Fetch users and orders in parallel

const [usersResponse, ordersResponse] = await Promise.all([

apiClient.fetch('/users'),

apiClient.fetch('/orders')

]);

if (!usersResponse.ok || !ordersResponse.ok) {

throw new Error('Failed to fetch data');

}

const [users, orders] = await Promise.all([

usersResponse.json(),

ordersResponse.json()

]);

// Map orders by userId for efficient lookup

const ordersByUser = {};

for (const order of orders) {

if (!ordersByUser[order.userId]) {

ordersByUser[order.userId] = [];

}

ordersByUser[order.userId].push(order);

}

// Combine data

return users.map(user => ({

user,

orders: ordersByUser[user.id] || []

}));

}I fetched users and orders in parallel to improve performance. I also consolidated error handling and used a map (ordersByUser) to group orders by user, which makes the combination step cleaner and potentially faster.*

我并行获取了用户和订单以提升性能。我还整合了错误处理,并使用了一个映射(ordersByUser)按用户分组订单,这使得合并步骤更简洁且可能更快。

Analysis: This is actually a pretty good refactor, hitting several improvements: parallel fetching, grouping orders by user for efficiency, cleaner combination using .map and defaulting to an empty array. The AI also explained what it did. So why do we label this prompt as underspecified or “poor”? In this case, the AI happened to make good choices, but it assumed a lot because the prompt was open-ended. It assumed that performance should be improved (parallel requests, which is a smart change but what if parallel wasn’t safe here?), and it changed error handling to a generic message “Failed to fetch data,” which in combining the errors lost the specificity of which call failed.

分析:这实际上是一个相当不错的重构,实现了几个改进:并行获取、按用户分组订单以提高效率、使用 .map 进行更清晰的组合,并默认返回空数组。AI 还解释了它所做的操作。那么,为什么我们会将这个提示标记为不明确或“差”呢?在这种情况下,AI 恰好做出了不错的选择,但它做了很多假设,因为提示是开放式的。它假设应该提升性能(并行请求,这是一个聪明的改动,但如果这里并行不安全怎么办?),并且它将错误处理改为通用消息“获取数据失败”,在合并错误时丢失了具体是哪个调用失败的细节。

The risk with a generic “Refactor this” prompt is that the AI might make changes that alter behavior in unintended ways. Here, combining error handling might be acceptable or not, depending on requirements. The assistant also made a structural change (using a dictionary to group orders) – that’s fine and probably for the better, but if we had specific constraints (like wanting to stream results or keep memory usage low), we didn’t inform it. So while the result looks good, it was somewhat lucky that the AI’s own interpretation of “refactor” aligned with what we wanted. This illustrates that an underspecified prompt can sometimes get good results on simple cases, but for complex code, it might go off in an undesired direction or miss certain improvements you care about.

使用通用的“重构这个”提示的风险在于,AI 可能会做出改变行为的修改,而这些修改可能是无意的。在这里,合并错误处理可能是可接受的,也可能不可接受,这取决于具体需求。助手还做了结构上的改变(使用字典来分组订单)——这没问题,可能还更好,但如果我们有特定的限制(比如希望流式处理结果或保持低内存使用),我们并没有告知它。因此,虽然结果看起来不错,但 AI 对“重构”的理解恰好符合我们的期望,这有点幸运。这说明,提示不够具体时,在简单情况下有时能得到不错的结果,但对于复杂代码,可能会偏离预期方向或错过你关心的某些改进。

✅ Prompt 2 (Goal-Oriented): “Refactor the above getCombinedData function to eliminate duplicate code and improve performance. Specifically: (1) Avoid repeating the fetch logic for users and orders – maybe use a helper or fetch them together. (2) Fetch both lists in parallel if possible. (3) Keep the error handling for each fetch (we want to know which call failed). (4) Improve the combination of data, possibly by using a more efficient structure for lookup instead of a nested loop. Provide the refactored code with comments explaining the changes.”

✅ 提示 2(目标导向):“重构上述 getCombinedData 函数,消除重复代码并提升性能。具体要求:(1)避免重复获取用户和订单的逻辑——可以使用辅助函数或一起获取;(2)如果可能,尽量并行获取两个列表;(3)保留每次获取的错误处理(我们需要知道哪个调用失败了);(4)改进数据合并方式,可能通过使用更高效的查找结构替代嵌套循环。请提供带有注释说明更改的重构代码。”

AI’s response to Prompt 2:

用户:

将以下内容翻译成简体中文:

AI 对提示 2 的回复:

*Sure! Here’s an improved version of getCombinedData with the requested refactoring:

*当然!这是经过请求重构后改进的 getCombinedData 版本:

async function getCombinedData(apiClient) {

// 1. Fetch users and orders in parallel to improve performance

const [usersResponse, ordersResponse] = await Promise.all([

apiClient.fetch('/users'),

apiClient.fetch('/orders')

]);

// 2. Handle errors separately to know which call failed

if (!usersResponse.ok) {

throw new Error('Failed to fetch users');

}

if (!ordersResponse.ok) {

throw new Error('Failed to fetch orders');

}

// Parse JSON responses in parallel

const [users, orders] = await Promise.all([

usersResponse.json(),

ordersResponse.json()

]);

// 3. Use a lookup map for orders by userId for efficient matching

const ordersByUser = orders.reduce((map, order) => {

if (!map[order.userId]) {

map[order.userId] = [];

}

map[order.userId].push(order);

return map;

}, {});

// 4. Combine data using the lookup map

return users.map(user => ({

user,

orders: ordersByUser[user.id] || [] // empty array if no orders for user

}));

}Explanation of changes: 变更说明:

Parallel fetching: I used Promise.all to fetch users and orders at the same time (point 1).

并行获取:我使用了 Promise.all 同时获取用户和订单(要点 1)。Preserved individual error handling: I kept separate checks for usersResponse.ok and ordersResponse.ok so that any error clearly indicates which fetch failed (point 2).

保留了单独的错误处理:我分别检查了 usersResponse.ok 和 ordersResponse.ok,这样任何错误都能清楚地表明是哪个请求失败了(要点 2)。Parallel JSON parsing: Also used Promise.all for response.json() calls, which is a minor improvement.

并行 JSON 解析:还使用了 Promise.all 来处理 response.json() 调用,这是一个小的改进。Efficient combination: Instead of a nested loop, I created an ordersByUser map (point 3). This groups orders by userId in a single pass. Then I map over users to build the result, pulling each user’s orders from the map in O(1) time (point 4). This is more efficient and concise.*

高效组合:我没有使用嵌套循环,而是创建了一个 ordersByUser 映射(第 3 点)。这在一次遍历中按 userId 对订单进行了分组。然后我遍历用户来构建结果,从映射中以 O(1) 时间获取每个用户的订单(第 4 点)。这样更高效且简洁。

Analysis: This response directly addressed all the specified goals. The code is refactored to be cleaner and faster, and it maintained separate error messages as requested. The AI’s explanation confirms each point we listed, which shows it carefully followed the prompt instructions. This is a great outcome because we, as the prompter, defined what “refactor” meant in this context. By doing so, we guided the AI to produce a solution that matches our needs with minimal back-and-forth. If the AI had overlooked one of the points (say it still merged the error handling), we could easily prompt again: “Looks good, but please ensure the error messages remain distinct for users vs orders.” – however, in this case it wasn’t needed because our prompt was thorough.

分析:该回复直接解决了所有指定目标。代码被重构得更简洁、更快,并且保持了请求中要求的独立错误信息。AI 的解释确认了我们列出的每一点,表明它认真遵循了提示指令。这是一个很好的结果,因为作为提示者的我们定义了“重构”在此上下文中的含义。通过这样做,我们引导 AI 生成了符合我们需求的解决方案,减少了反复沟通。如果 AI 忽略了某一点(比如仍然合并了错误处理),我们可以轻松再次提示:“看起来不错,但请确保用户和订单的错误信息保持区分。”——不过在本例中不需要,因为我们的提示非常全面。

This example demonstrates a key lesson: when you know what you want improved, spell it out. AI is good at following instructions, but it won’t read your mind. A broad “make this better” might work for simple things, but for non-trivial code, you’ll get the best results by enumerating what “better” means to you. This aligns with community insights that clear, structured prompts yield significantly improved results .

这个例子展示了一个关键的教训:当你知道想要改进什么时,要明确表达。人工智能擅长遵循指令,但它不会读心术。一个笼统的“让它更好”可能适用于简单的事情,但对于非简单的代码,列举“更好”对你来说意味着什么,才能获得最佳效果。这与社区的见解一致,即清晰、有结构的提示能显著提升结果。

Additional Refactoring Tips:

额外的重构建议:

Refactor in steps: If the code is very large or you have a long list of changes, you can tackle them one at a time. For example, first ask the AI to “refactor for readability” (focus on renaming, splitting functions), then later “optimize the algorithm in this function.” This prevents overwhelming the model with too many instructions at once and lets you verify each change stepwise.

分步骤重构:如果代码非常庞大或有一长串修改项,可以一次处理一部分。例如,先让 AI“为了可读性进行重构”(重点是重命名、拆分函数),然后再“优化这个函数中的算法”。这样可以避免一次给模型过多指令,同时让你逐步验证每次修改。Ask for alternative approaches: Maybe the AI’s first refactor works but you’re curious about a different angle. You can ask, “Can you refactor it in another way, perhaps using functional programming style (e.g. array methods instead of loops)?” or “How about using recursion here instead of iterative approach, just to compare?” This way, you can evaluate different solutions. It’s like brainstorming multiple refactoring options with a colleague.

询问替代方案:也许 AI 的第一次重构已经有效,但你想了解不同的思路。你可以问:“你能用另一种方式重构吗,比如使用函数式编程风格(例如用数组方法代替循环)?”或者“这里用递归代替迭代方法怎么样,仅作比较?”这样你可以评估不同的解决方案,就像和同事一起头脑风暴多种重构选项。Combine refactoring with explanation to learn patterns: We touched on this, but it’s worth emphasizing – use the AI as a learning tool. If it refactors code in a clever way, study the output and explanation. You might discover a new API or technique (like using reduce to build a map) that you hadn’t used before. This is one reason to ask for explanations: it turns an answer into a mini-tutorial, reinforcing your understanding of best practices.

结合重构与解释来学习模式:我们已经提到过这一点,但值得强调——将 AI 作为学习工具使用。如果它以巧妙的方式重构代码,仔细研究输出和解释。你可能会发现之前未使用过的新 API 或技术(比如使用 reduce 来构建映射)。这也是请求解释的一个原因:它将答案变成一个迷你教程,加强你对最佳实践的理解。Validation and testing: After any AI-generated refactor, always run your tests or try the code with sample inputs. AI might inadvertently introduce subtle bugs, especially if the prompt didn’t specify an important constraint. For example, in our refactor, if the original code intentionally separated fetch errors for logging but we didn’t mention logging, the combined error might be less useful. It’s our job to catch that in review. The AI can help by writing tests too – you could ask “Generate a few unit tests for the refactored function” to ensure it behaves the same as before on expected inputs.

验证和测试:在任何 AI 生成的重构之后,务必运行测试或使用示例输入尝试代码。AI 可能无意中引入细微的错误,尤其是在提示中未指定重要约束的情况下。例如,在我们的重构中,如果原始代码有意将获取错误分开以便记录日志,但我们没有提及日志记录,合并后的错误可能就不那么有用了。发现这些问题是我们审查时的职责。AI 也可以通过编写测试来帮助——你可以请求“为重构后的函数生成几个单元测试”,以确保其在预期输入下的行为与之前相同。

At this point, we’ve covered debugging and refactoring – improving existing code. The next logical step is to use AI assistance for implementing new features or generating new code. We’ll explore how to prompt for that scenario effectively.

到目前为止,我们已经涵盖了调试和重构——改进现有代码。下一步的合理步骤是使用 AI 辅助来实现新功能或生成新代码。我们将探讨如何有效地为这种场景设计提示。

Modern debugging scenarios

现代调试场景

React Hook dependency issues

React Hook 依赖问题

❌ Poor Prompt: "My useEffect isn't working right"

❌ 不佳的提示:“我的 useEffect 不能正常工作”

✅ Enhanced Prompt: ✅ 增强提示:

I have a React component that fetches user data, but it's causing infinite re-renders. Here's my code:

const UserProfile = ({ userId }) => {

const [user, setUser] = useState(null);

const [loading, setLoading] = useState(true);

useEffect(() => {

fetchUser(userId).then(setUser).finally(() => setLoading(false));

}, [userId, setUser, setLoading]); // Problem is here

return loading ? <div>Loading...</div> : <div>{user?.name}</div>;

};Expected behavior: Should fetch user data once when userId changes Actual behavior: Component re-renders infinitely Error in console: "Warning: Maximum update depth exceeded"

预期行为:当 userId 变化时应只获取一次用户数据

实际行为:组件无限重新渲染

控制台错误:"警告:超过最大更新深度"

What's causing this infinite loop and how do I fix the dependency array?

是什么导致了这个无限循环,我该如何修复依赖数组?

Why this works: Provides exact code, error message, expected vs actual behavior, and focuses on a specific React pattern that's commonly misunderstood.

为什么这样有效:提供了准确的代码、错误信息、预期与实际行为的对比,并聚焦于一个常被误解的特定 React 模式。

Modern state architecture

现代状态架构

❌ Poor Prompt: "Build the state management for my Next.js ecommerce app”

❌ 不佳的提示:“为我的 Next.js 电子商务应用构建状态管理”

✅ Enhanced Prompt: ✅ 增强提示:

I'm building a Next.js 14 e-commerce app and need to design the state management architecture. Here are my requirements:

我正在构建一个 Next.js 14 电子商务应用,需要设计状态管理架构。以下是我的需求:

Components: 组件:

Product listing page (needs: products[], filters, pagination)

产品列表页面(需要:products[],过滤器,分页)Shopping cart (needs: cart items, totals, shipping info)

购物车(需要:购物车商品、总计、运输信息)User auth (needs: user profile, auth status, preferences)

用户认证(需要:用户资料、认证状态、偏好设置)Real-time notifications (needs: toast messages, error states)

实时通知(需求:吐司消息,错误状态)

Technical constraints: 技术限制:

Next.js 14 with App Router and Server Components

Next.js 14,使用 App Router 和服务器组件TypeScript strict mode TypeScript 严格模式

Server-side data fetching for SEO

服务器端数据获取以优化 SEOClient-side interactivity for cart/user actions

客户端交互用于购物车/用户操作State should persist across navigation

状态应在导航过程中保持

Should I use: 我应该使用:

Zustand stores for each domain (cart, auth, notifications)

每个领域(购物车、认证、通知)的 Zustand 存储器React Query/TanStack Query for server state + Zustand for client state

React Query/TanStack Query 用于服务器状态 + Zustand 用于客户端状态A single Zustand store with slices

带有切片的单个 Zustand 存储

Please provide a recommended architecture with code examples showing how to structure stores and integrate with Next.js App Router patterns.

请提供一个推荐的架构,并附带代码示例,展示如何构建存储以及如何与 Next.js App Router 模式集成。

Why this works: Real-world scenario with specific tech stack, clear requirements, and asks for architectural guidance with implementation details.

为什么这有效:真实场景,具体技术栈,明确需求,并且请求架构指导及实现细节。

Prompt patterns for implementing new features

实现新功能的提示模式

One of the most exciting uses of AI code assistants is to help you write new code from scratch or integrate a new feature into an existing codebase. This could range from generating a boilerplate for a React component to writing a new API endpoint in an Express app. The challenge here is often that these tasks are open-ended – there are many ways to implement a feature. Prompt engineering for code generation is about guiding the AI to produce code that fits your needs and style. Here are strategies to do that:

AI 代码助手最令人兴奋的用途之一是帮助你从零开始编写新代码或将新功能集成到现有代码库中。这可以包括生成 React 组件的模板代码,也可以是编写 Express 应用中的新 API 端点。这里的挑战通常在于这些任务是开放性的——实现一个功能有多种方式。代码生成的提示工程就是引导 AI 生成符合你需求和风格的代码。以下是实现这一目标的策略:

1. Start with high-level instructions, then drill down. Begin by outlining what you want to build in plain language, possibly breaking it into smaller tasks (similar to our advice on breaking down complex tasks earlier). For example, say you want to add a search bar feature to an existing web app. You might first prompt: “Outline a plan to add a search feature that filters a list of products by name in my React app. The products are fetched from an API.”

1. 从高层次的指令开始,然后逐步深入。首先用简单的语言概述你想要构建的内容,可能还会将其拆分成更小的任务(类似于我们之前关于拆解复杂任务的建议)。例如,假设你想在现有的网页应用中添加一个搜索栏功能。你可以先提示:“概述一个计划,在我的 React 应用中添加一个按名称过滤产品列表的搜索功能。产品数据是从 API 获取的。”

The AI might give you a step-by-step plan: “1. Add an input field for the search query. 2. Add state to hold the query. 3. Filter the products list based on the query. 4. Ensure it’s case-insensitive, etc.” Once you have this plan (which you can refine with the AI’s help), you can tackle each bullet with focused prompts.

AI 可能会给你一个逐步的计划:“1. 添加一个用于搜索查询的输入框。2. 添加状态以保存查询内容。3. 根据查询过滤产品列表。4. 确保搜索不区分大小写,等等。”一旦你有了这个计划(你可以借助 AI 进行完善),就可以针对每个要点进行有针对性的提示。

For instance: “Okay, implement step 1: create a SearchBar component with an input that updates a searchQuery state.” After that, “Implement step 3: given the searchQuery and an array of products, filter the products (case-insensitive match on name).” By dividing the feature, you ensure each prompt is specific and the responses are manageable. This also mirrors iterative development – you can test each piece as it’s built.

例如:“好的,执行步骤 1:创建一个带有输入框的 SearchBar 组件,该输入框会更新 searchQuery 状态。”之后,“执行步骤 3:根据 searchQuery 和产品数组,筛选产品(名称不区分大小写匹配)。”通过拆分功能,确保每个提示都具体且响应易于管理。这也反映了迭代开发——你可以在构建过程中测试每个部分。

2. Provide relevant context or reference code. If you’re adding a feature to an existing project, it helps tremendously to show the AI how similar things are done in that project. For example, if you already have a component that is similar to what you want, you can say: “Here is an existing UserList component (code…). Now create a ProductList component that is similar but includes a search bar.”

2. 提供相关的上下文或参考代码。如果你正在为现有项目添加功能,向 AI 展示该项目中类似功能的实现方式会非常有帮助。例如,如果你已经有一个与所需功能相似的组件,可以说:“这是一个现有的 UserList 组件(代码……)。现在创建一个类似的 ProductList 组件,但包含一个搜索栏。”

The AI will see the patterns (maybe you use certain libraries or style conventions) and apply them. Having relevant files open or referencing them in your prompt provides context that leads to more project-specific and consistent code suggestions . Another trick: if your project uses a particular coding style or architecture (say Redux for state or a certain CSS framework), mention that. “We use Redux for state management – integrate the search state into Redux store.”

AI 会识别模式(可能你使用了某些库或风格规范)并加以应用。打开相关文件或在提示中引用它们,可以提供信息,从而生成更符合项目特点且一致的代码建议。另一个技巧是:如果你的项目使用特定的编码风格或架构(比如用于状态管理的 Redux 或某个 CSS 框架),请提及它。“我们使用 Redux 进行状态管理——将搜索状态集成到 Redux 存储中。”

A well-trained model will then generate code consistent with Redux patterns, etc. Essentially, you are teaching the AI about your project’s environment so it can tailor the output. Some assistants can even use your entire repository as context to draw from; if using those, ensure you point it to similar modules or documentation in your repo.

经过良好训练的模型将生成符合 Redux 模式等的代码。本质上,您是在向 AI 传授有关您项目环境的信息,以便它能够定制输出。一些助手甚至可以使用您整个代码库作为参考;如果使用这些助手,请确保指向您代码库中类似的模块或文档。

If starting something new but you have a preferred approach, you can also mention that: “I’d like to implement this using functional programming style (no external state, using array methods).” Or, “Ensure to follow the MVC pattern and put logic in the controller, not the view.” These are the kind of details a senior engineer might remind a junior about, and here you are the senior telling the AI.

如果开始做新的事情,但你有一个偏好的方法,也可以提及:“我想用函数式编程风格来实现这个(无外部状态,使用数组方法)。”或者,“确保遵循 MVC 模式,把逻辑放在控制器中,而不是视图里。”这些都是资深工程师可能会提醒初级工程师的细节,而这里你就是那个告诉 AI 的资深工程师。

3. Use comments and TODOs as inline prompts. When working directly in an IDE with Copilot, one effective workflow is writing a comment that describes the next chunk of code you need, then letting the AI autocomplete it. For example, in a Node.js backend, you might write: // TODO: Validate the request payload (ensure name and email are provided) and then start the next line. Copilot often picks up on the intent and generates a block of code performing that validation. This works because your comment is effectively a natural language prompt. However, be prepared to edit the generated code if the AI misinterprets – as always, verify its correctness.

3. 使用注释和 TODO 作为内联提示。在 IDE 中直接使用 Copilot 时,一个有效的工作流程是写一条描述你需要的下一段代码的注释,然后让 AI 自动补全。例如,在 Node.js 后端,你可能会写:// TODO: 验证请求负载(确保提供了姓名和邮箱),然后开始写下一行代码。Copilot 通常能理解意图,生成执行该验证的代码块。这之所以有效,是因为你的注释实际上是一个自然语言提示。不过,如果 AI 理解有误,准备好编辑生成的代码——一如既往,务必验证其正确性。

4. Provide examples of expected input/output or usage. Similar to what we discussed before, if you’re asking the AI to implement a new function, include a quick example of how it will be used or a simple test case. For instance: “Implement a function formatPrice(amount) in JavaScript that takes a number (like 2.5) and returns a string formatted in USD (like $2.50). For example, formatPrice(2.5) should return '$2.50'.”

4. 提供预期输入/输出或用法的示例。类似于我们之前讨论的,如果你要求 AI 实现一个新函数,包含一个该函数如何使用的简短示例或一个简单的测试用例。例如:“用 JavaScript 实现一个函数 formatPrice(amount),该函数接收一个数字(如 2.5)并返回一个格式化为美元的字符串(如 $2.50)。例如,formatPrice(2.5) 应返回 '$2.50'。”

By giving that example, you constrain the AI to produce a function consistent with it. Without the example, the AI might assume some other formatting or currency. The difference could be subtle but important. Another example in a web context: “Implement an Express middleware that logs requests. For instance, a GET request to /users should log ‘GET /users’ to the console.” This makes it clear what the output should look like. Including expected behavior in the prompt acts as a test the AI will try to satisfy.

通过给出这个例子,你限制了 AI 生成与之相符的函数。没有这个例子,AI 可能会假设其他格式或货币。差异可能很微妙但很重要。另一个网络环境中的例子:“实现一个记录请求的 Express 中间件。例如,对 /users 的 GET 请求应在控制台记录 ‘GET /users’。”这明确了输出应是什么样子。在提示中包含预期行为,起到了 AI 会尝试满足的测试作用。

5. When the result isn’t what you want, rewrite the prompt with more detail or constraints. It’s common that the first attempt at generating a new feature doesn’t nail it. Maybe the code runs but is not idiomatic, or it missed a requirement. Instead of getting frustrated, treat the AI like a junior dev who gave a first draft – now you need to give feedback. For example, “The solution works but I’d prefer if you used the built-in array filter method instead of a for loop.” Or, “Can you refactor the generated component to use React Hooks for state instead of a class component? Our codebase is all functional components.” You can also add new constraints: “Also, ensure the function runs in O(n) time or better, because n could be large.” This iterative prompting is powerful. A real-world scenario: one developer asked an LLM to generate code to draw an ice cream cone using a JS canvas library, but it kept giving irrelevant output until they refined the prompt with more specifics and context . The lesson is, don’t give up after one try. Figure out what was lacking or misunderstood in the prompt and clarify it. This is the essence of prompt engineering – each tweak can guide the model closer to what you envision.

5. 当结果不是你想要的,重新编写提示,增加更多细节或限制条件。首次尝试生成新功能时常常不会完美。代码可能能运行,但不符合惯用写法,或者遗漏了某个需求。不要沮丧,把 AI 当作一个给出初稿的初级开发者——现在你需要给出反馈。例如,“解决方案可行,但我更希望你使用内置的数组 filter 方法,而不是 for 循环。”或者,“你能重构生成的组件,使用 React Hooks 来管理状态,而不是类组件吗?我们的代码库全部是函数组件。”你还可以添加新的限制:“另外,确保函数的运行时间是 O(n) 或更优,因为 n 可能很大。”这种迭代式提示非常强大。一个真实案例:一位开发者让 LLM 使用 JS 画布库生成绘制冰淇淋蛋筒的代码,但它不断输出无关内容,直到他们用更具体和有上下文的提示进行了细化。教训是,不要在一次尝试后放弃。找出提示中缺失或被误解的部分并加以澄清。 这就是提示工程的精髓——每一次调整都能引导模型更接近你所设想的结果。

Let’s illustrate feature implementation prompting with an example scenario:

让我们通过一个示例场景来说明功能实现提示:

Feature implementation example: building a React component with AI assistance

功能实现示例:在 AI 辅助下构建 React 组件

Suppose we have a React application and we want to add a new component: a product list with a search filter and a sort dropdown. This is a fairly typical feature in a web app. We’ll focus on prompting for the search functionality within this component for brevity.

假设我们有一个 React 应用程序,想要添加一个新组件:一个带有搜索过滤和排序下拉菜单的产品列表。这是在网页应用中相当典型的功能。为了简洁起见,我们将重点放在该组件中的搜索功能提示上。

Initial Prompt (Feature request): “Create a React functional component called ProductList that displays a list of products and includes a text input to filter the products by name. The component should: fetch an array of products from /api/products (assume it returns JSON array of {id, name, ...}), store them in state, and allow the user to type in a search box to filter the displayed products by name (case-insensitive match). Display the filtered list in a simple <ul> with product names. Include basic error handling (e.g. show an error message if the API call fails) and a loading state while fetching.”

初始提示(功能请求):“创建一个名为 ProductList 的 React 函数组件,显示产品列表,并包含一个文本输入框,用于按名称过滤产品。该组件应:从 /api/products 获取产品数组(假设返回 JSON 数组,格式为 {id, name, ...}),将其存储在状态中,并允许用户在搜索框中输入内容,以按名称过滤显示的产品(不区分大小写匹配)。以简单的 <ul> 显示过滤后的产品名称列表。包括基本的错误处理(例如,如果 API 调用失败则显示错误信息)和加载状态指示。”

That’s a long prompt but it’s specific. Let’s assume we give this to an LLM in a chat.

这是一个较长但具体的提示。假设我们将其提供给 LLM 进行对话。

AI’s response (summary of what it might produce): The AI would likely output a ProductList component something like:

用户:

翻译成简体中文(仅输出翻译内容):

AI 的回答(可能生成的摘要):AI 很可能会输出一个类似于 ProductList 组件的内容:

import React, { useState, useEffect } from 'react';

function ProductList() {

const [products, setProducts] = useState([]);

const [search, setSearch] = useState('');

const [loading, setLoading] = useState(true);

const [error, setError] = useState(null);

useEffect(() => {

async function fetchProducts() {

try {

const res = await fetch('/api/products');

if (!res.ok) throw new Error('Network response was not ok');

const data = await res.json();

setProducts(data);

} catch (err) {

console.error('Failed to fetch products:', err);

setError('Failed to load products.');

} finally {

setLoading(false);

}

}

fetchProducts();

}, []);

const handleSearchChange = (e) => {

setSearch(e.target.value);

};

// Filter products by search query (case-insensitive)

const filteredProducts = products.filter(product =>

product.name.toLowerCase().includes(search.toLowerCase())

);

if (loading) return <div>Loading products...</div>;

if (error) return <div>{error}</div>;

return (

<div className="product-list">

<input

type="text"

placeholder="Search products"

value={search}

onChange={handleSearchChange}

/>

<ul>

{filteredProducts.map(prod => (

<li key={prod.id}>{prod.name}</li>

))}

</ul>

</div>

);

}

export default ProductList;(Plus possibly some comments in the code explaining each part, if we asked for them.)

(如果我们要求的话,也可以在代码中添加一些解释每个部分的注释。)

Now, let’s analyze this result and consider prompt refinements:

现在,让我们分析这个结果并考虑提示的改进:

Suppose the above code came back, but our project uses a context or a global store for data fetching instead of fetching inside the component. This is something we didn’t specify. The AI made a choice to fetch in useEffect. If we prefer using a custom hook or context, we should have hinted at that. So we could refine our prompt: “Actually, in our app we use a custom hook useProducts() that already handles fetching. Please refactor the component to use useProducts hook instead of directly calling fetch.” The AI would then adjust, perhaps assuming useProducts returns { products, loading, error } and simplify the component accordingly.

假设上述代码返回了结果,但我们的项目使用的是上下文或全局存储来进行数据获取,而不是在组件内部进行获取。这是我们没有说明的。AI 选择在 useEffect 中进行获取。如果我们更倾向于使用自定义钩子或上下文,应该提前提示。因此我们可以改进提示:“实际上,在我们的应用中,我们使用了一个自定义钩子 useProducts(),它已经处理了数据获取。请重构组件,改用 useProducts 钩子,而不是直接调用 fetch。”AI 随后会进行调整,可能假设 useProducts 返回{ products, loading, error },并相应地简化组件。Another refinement: maybe we realize we also want a sort dropdown (which we didn’t mention initially). We can now extend the conversation: “Great, now add a dropdown to sort the products by name (A-Z or Z-A). The dropdown should let the user choose ascending or descending, and the list should sort accordingly in addition to the filtering.” Because the AI has the context of the existing code, it can insert a sort state and adjust the rendering. We provided a clear new requirement, and it will attempt to fulfill it, likely by adding something like:

另一个改进:也许我们意识到还需要一个排序下拉菜单(之前没有提到)。我们现在可以扩展对话:“很好,现在添加一个下拉菜单,根据名称对产品进行排序(A-Z 或 Z-A)。下拉菜单应允许用户选择升序或降序,列表应在过滤的基础上进行相应排序。”由于 AI 了解现有代码的情况,它可以插入排序状态并调整渲染。我们提供了一个明确的新需求,它会尝试实现,可能会添加类似的内容:

const [sortOrder, setSortOrder] = useState('asc');

// ... a select input for sortOrder ...

// and sort the filteredProducts before rendering:

const sortedProducts = [...filteredProducts].sort((a, b) => {

if (sortOrder === 'asc') return a.name.localeCompare(b.name);

else return b.name.localeCompare(a.name);

});(plus the dropdown UI). (加上下拉菜单界面)。

By iterating like this, feature by feature, we simulate a development cycle with the AI. This is far more effective than trying to prompt for the entire, complex component with all features in one go initially. It reduces mistakes and allows mid-course corrections as requirements become clearer.

通过这样逐个功能地迭代,我们模拟了与 AI 的开发周期。这比一开始就试图一次性提示包含所有功能的复杂组件要有效得多。它减少了错误,并允许在需求变得更清晰时进行中途调整。If the AI makes a subtle mistake (say it forgot to make the search filter case-insensitive), we just point that out: “Make the search case-insensitive.” It will adjust the filter to use lowercase comparison (which in our pseudo-output it already did, but if not it would fix it).

如果 AI 出现了一个细微的错误(比如忘记让搜索过滤器不区分大小写),我们只需指出:“让搜索不区分大小写。” 它会调整过滤器以使用小写比较(在我们的伪输出中它已经这样做了,但如果没有,它会修正)。

This example shows that implementing features with AI is all about incremental development and prompt refinement. A Twitter thread might exclaim how someone built a small app by continually prompting an LLM for each part – that’s essentially the approach: build, review, refine, extend. Each prompt is like a commit in your development process.

这个例子表明,使用 AI 实现功能完全是关于渐进式开发和提示优化的过程。一个 Twitter 帖子可能会惊叹某人如何通过不断地为每个部分提示 LLM 来构建一个小应用——这本质上就是方法:构建、审查、优化、扩展。每个提示就像你开发过程中的一次提交。

Additional tips for feature implementation:

实现功能的额外提示:

Let the AI scaffold, then you fill in specifics: Sometimes it’s useful to have the AI generate a rough structure, then you tweak it. For example, “Generate the skeleton of a Node.js Express route for user registration with validation and error handling.” It might produce a generic route with placeholders. You can then fill in the actual validation rules or database calls which are specific to your app. The AI saves you from writing boilerplate, and you handle the custom logic if it’s sensitive.

让 AI 搭建框架,然后你填充细节:有时让 AI 生成一个粗略结构,然后你进行调整是很有用的。例如,“生成一个用于用户注册的 Node.js Express 路由骨架,包含验证和错误处理。”它可能会生成一个带有占位符的通用路由。然后你可以填充实际的验证规则或数据库调用,这些是你应用特有的。AI 帮你省去了写样板代码的工作,而你负责处理敏感的自定义逻辑。Ask for edge case handling: When generating a feature, you might prompt the AI to think of edge cases: “What edge cases should we consider for this feature (and can you handle them in the code)?” For instance, in the search example, an edge case might be “what if the products haven’t loaded yet when the user types?” (though our code handles that via loading state) or “what if two products have the same name” (not a big issue but maybe mention it). The AI could mention things like empty result handling, very large lists (maybe needing debounce for search input), etc. This is a way to leverage the AI’s training on common pitfalls.

询问边界情况处理:在生成一个功能时,你可以提示 AI 考虑边界情况:“我们应该考虑这个功能的哪些边界情况(你能在代码中处理它们吗)?”例如,在搜索的例子中,一个边界情况可能是“用户输入时产品还没加载完成怎么办?”(虽然我们的代码通过加载状态处理了这个问题)或者“如果两个产品同名怎么办”(这不是大问题,但可以提及)。AI 可能会提到诸如空结果处理、非常大的列表(可能需要对搜索输入进行防抖处理)等。这是一种利用 AI 对常见陷阱训练的方式。Documentation-driven development: A nifty approach some have taken is writing a docstring or usage example first and having the AI implement the function to match. For example:

文档驱动开发:一些人采用的一种巧妙方法是先编写文档字符串或使用示例,然后让 AI 实现与之匹配的函数。例如:

/**

* Returns the nth Fibonacci number.

* @param {number} n - The position in Fibonacci sequence (0-indexed).

* @returns {number} The nth Fibonacci number.

*

* Example: fibonacci(5) -> 5 (sequence: 0,1,1,2,3,5,…)

*/

function fibonacci(n) {

// ... implementation

}If you write the above comment and function signature, an LLM might fill in the implementation correctly because the comment describes exactly what to do and even gives an example. This technique ensures you clarify the feature in words first (which is a good practice generally), and then the AI uses that as the spec to write the code.

如果你写出上述注释和函数签名,LLM 可能会正确地填写实现,因为注释准确描述了要做什么,甚至给出了示例。这种技巧确保你首先用文字澄清功能(这通常是一个好习惯),然后 AI 将其作为规范来编写代码。

Having covered prompting strategies for debugging, refactoring, and new code generation, let’s turn our attention to some common pitfalls and anti-patterns in prompt engineering for coding. Understanding these will help you avoid wasting time on unproductive interactions and quickly adjust when the AI isn’t giving you what you need.

在介绍了用于调试、重构和新代码生成的提示策略后,让我们将注意力转向编码提示工程中的一些常见陷阱和反模式。了解这些内容将帮助你避免在无效的交互中浪费时间,并在 AI 未能满足你的需求时迅速调整。

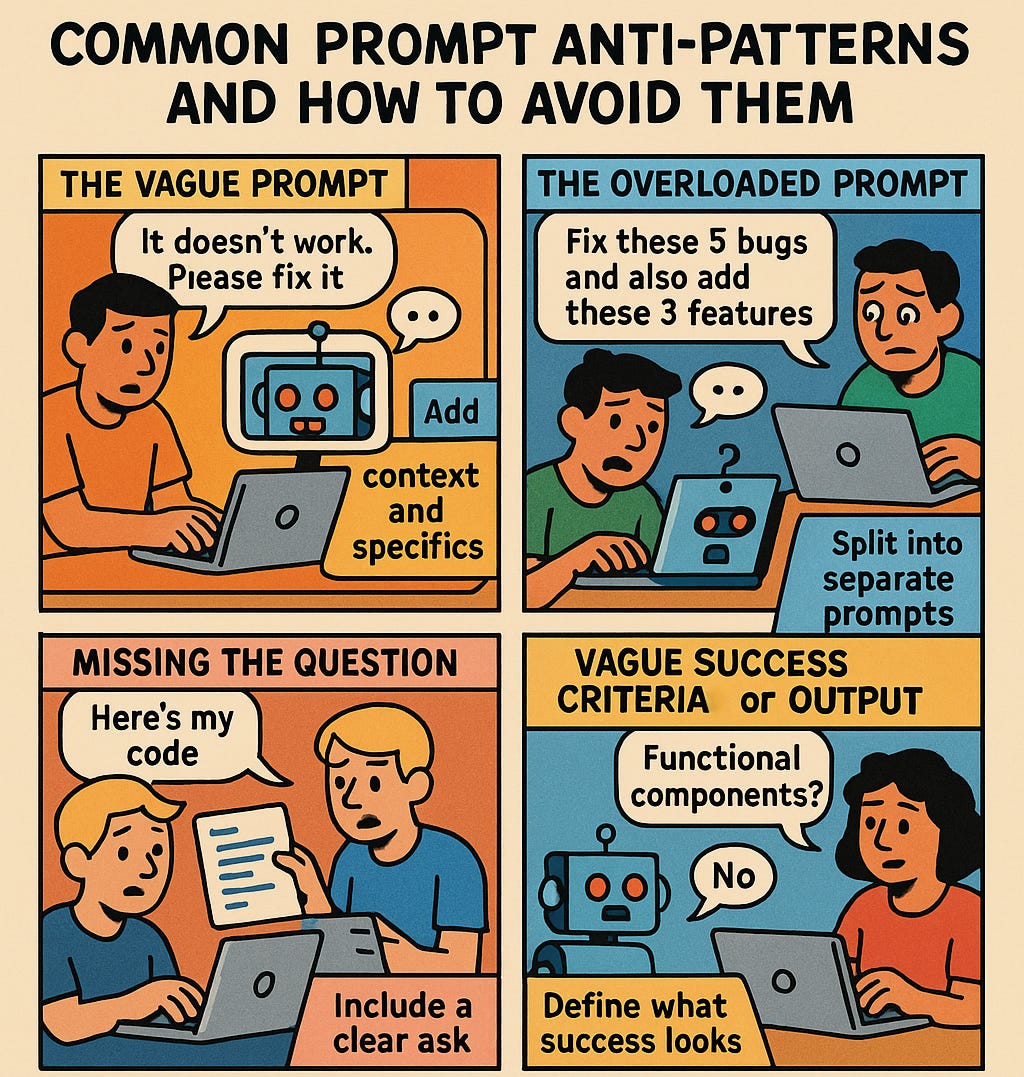

Common prompt anti-Patterns and how to avoid them

常见的提示反模式及其避免方法

Not all prompts are created equal. By now, we’ve seen numerous examples of effective prompts, but it’s equally instructive to recognize anti-patterns – common mistakes that lead to poor AI responses.

并非所有提示词都是平等的。到目前为止,我们已经见过许多有效提示词的例子,但同样有必要认识到反模式——那些导致 AI 响应不佳的常见错误。

Here are some frequent prompt failures and how to fix them:

以下是一些常见的提示失败及其解决方法:

Anti-Pattern: The Vague Prompt. This is the classic “It doesn’t work, please fix it” or “Write something that does X” without enough detail. We saw an example of this when the question “Why isn’t my function working?” got a useless answer . Vague prompts force the AI to guess the context and often result in generic advice or irrelevant code. The fix is straightforward: add context and specifics. If you find yourself asking a question and the answer feels like a Magic 8-ball response (“Have you tried checking X?”), stop and reframe your query with more details (error messages, code excerpt, expected vs actual outcome, etc.). A good practice is to read your prompt and ask, “Could this question apply to dozens of different scenarios?” If yes, it’s too vague. Make it so specific that it could only apply to your scenario.

反模式:模糊提示。这是经典的“它不起作用,请修复”或“写一些能做 X 的东西”,但没有足够的细节。我们看到一个例子,当问题是“为什么我的函数不起作用?”时,得到了无用的回答。模糊的提示迫使 AI 猜测上下文,常常导致泛泛的建议或无关的代码。解决方法很简单:添加上下文和具体细节。如果你发现自己在提问时,得到的回答像魔术 8 号球的回应(“你试过检查 X 吗?”),那就停下来,用更多细节重新构造你的问题(错误信息、代码片段、预期与实际结果等)。一个好习惯是读一遍你的提示,问自己:“这个问题是否适用于几十种不同的场景?”如果是,那就太模糊了。使其足够具体,以至于只能适用于你的场景。Anti-Pattern: The Overloaded Prompt. This is the opposite issue: asking the AI to do too many things at once. For instance, “Generate a complete Node.js app with authentication, a front-end in React, and deployment scripts.” Or even on a smaller scale, “Fix these 5 bugs and also add these 3 features in one go.” The AI might attempt it, but you’ll likely get a jumbled or incomplete result, or it might ignore some parts of the request. Even if it addresses everything, the response will be long and harder to verify. The remedy is to split the tasks. Prioritize: do one thing at a time, as we emphasized earlier. This makes it easier to catch mistakes and ensures the model stays focused. If you catch yourself writing a paragraph with multiple “and” in the instructions, consider breaking it into separate prompts or sequential steps.

反模式:过载提示。这是相反的问题:一次让 AI 做太多事情。例如,“生成一个完整的带有身份验证的 Node.js 应用,前端使用 React,以及部署脚本。”或者即使在较小的范围内,“一次修复这 5 个错误并添加这 3 个功能。”AI 可能会尝试,但你很可能会得到混乱或不完整的结果,或者它可能忽略请求的某些部分。即使它处理了所有内容,响应也会很长且难以验证。解决方法是拆分任务。优先处理:一次做一件事,正如我们之前强调的。这使得更容易发现错误,并确保模型保持专注。如果你发现自己在指令中写了包含多个“和”的段落,考虑将其拆分为单独的提示或顺序步骤。Anti-Pattern: Missing the Question. Sometimes users will present a lot of information but never clearly ask a question or specify what they need. For example, dumping a large code snippet and just saying “Here’s my code.” This can confuse the AI – it doesn’t know what you want. Always include a clear ask, such as “Identify any bugs in the above code”, “Explain what this code does”, or “Complete the TODOs in the code”. A prompt should have a purpose. If you just provide text without a question or instruction, the AI might make incorrect assumptions (like summarizing the code instead of fixing it, etc.). Make sure the AI knows why you showed it some code. Even a simple addition like, “What’s wrong with this code?” or “Please continue implementing this function.” gives it direction.

反模式:缺少问题。有时用户会提供大量信息,但从未明确提出问题或说明他们需要什么。例如,直接丢出一大段代码片段,然后说“这是我的代码。”这会让 AI 感到困惑——它不知道你想要什么。始终包含一个明确的请求,比如“找出上述代码中的任何错误”、“解释这段代码的功能”或“完成代码中的待办事项”。提示应该有明确的目的。如果你只是提供文本而没有问题或指令,AI 可能会做出错误的假设(比如总结代码而不是修复它等)。确保 AI 知道你为什么展示这段代码。即使是简单的补充,比如“这段代码有什么问题?”或“请继续实现这个函数。”也能为它指明方向。Anti-Pattern: Vague Success Criteria. This is a subtle one – sometimes you might ask for an optimization or improvement, but you don’t define what success looks like. For example, “Make this function faster.” Faster by what metric? If the AI doesn’t know your performance constraints, it might micro-optimize something that doesn’t matter or use an approach that’s theoretically faster but practically negligible. Or “make this code cleaner” – “cleaner” is subjective. We dealt with this by explicitly stating goals like “reduce duplication” or “improve variable names” etc. The fix: quantify or qualify the improvement. E.g., “optimize this function to run in linear time (current version is quadratic)” or “refactor this to remove global variables and use a class instead.” Basically, be explicit about what problem you’re solving with the refactor or feature. If you leave it too open, the AI might solve a different problem than the one you care about.

反模式:模糊的成功标准。这是一个微妙的问题——有时你可能会要求优化或改进,但没有定义成功的标准。例如,“让这个函数更快。”更快是以什么指标衡量?如果 AI 不知道你的性能限制,它可能会对无关紧要的部分进行微观优化,或者采用理论上更快但实际上微不足道的方法。或者“让这段代码更干净”——“干净”是主观的。我们通过明确陈述目标来解决这个问题,比如“减少重复”或“改进变量命名”等。解决方法:量化或限定改进。例如,“优化此函数使其运行时间为线性(当前版本是二次方)”或“重构此代码,去除全局变量,改用类。”基本上,要明确你通过重构或功能解决的具体问题。如果你留得太开放,AI 可能会解决与你关心的问题不同的问题。Anti-Pattern: Ignoring AI’s Clarification or Output. Sometimes the AI might respond with a clarifying question or an assumption. For instance: “Are you using React class components or functional components?” or “I assume the input is a string – please confirm.” If you ignore these and just reiterate your request, you’re missing an opportunity to improve the prompt. The AI is signaling that it needs more info. Always answer its questions or refine your prompt to include those details. Additionally, if the AI’s output is clearly off (like it misunderstood the question), don’t just retry the same prompt verbatim. Take a moment to adjust your wording. Maybe your prompt had an ambiguous phrase or omitted something essential. Treat it like a conversation – if a human misunderstood, you’d explain differently; do the same for the AI.

反模式:忽视 AI 的澄清或输出。有时 AI 可能会以澄清性问题或假设作出回应。例如:“你使用的是 React 类组件还是函数组件?”或者“我假设输入是字符串——请确认。”如果你忽略这些,只是一味重复你的请求,就错失了改进提示的机会。AI 正在提示它需要更多信息。务必回答它的问题,或调整你的提示以包含这些细节。此外,如果 AI 的输出明显有误(比如误解了问题),不要只是逐字重复同一个提示。花点时间调整你的措辞。也许你的提示中有模糊的表达或遗漏了某些关键内容。把它当作一次对话——如果是人类误解了,你会换种方式解释;对 AI 也应如此。Anti-Pattern: Varying Style or Inconsistency. If you keep changing how you ask or mixing different formats in one go, the model can get confused. For example, switching between first-person and third-person in instructions, or mixing pseudocode with actual code in a confusing way. Try to maintain a consistent style within a single prompt. If you provide examples, ensure they are clearly delineated (use Markdown triple backticks for code, quotes for input/output examples, etc.). Consistency helps the model parse your intent correctly. Also, if you have a preferred style (say, ES6 vs ES5 syntax), consistently mention it, otherwise the model might suggest one way in one prompt and another way later.

反模式:风格多变或不一致。如果你不断改变提问方式或在一次输入中混合不同格式,模型可能会感到困惑。例如,在指令中切换第一人称和第三人称,或以混乱的方式混合伪代码和实际代码。尽量在单个提示中保持风格一致。如果提供示例,确保它们清晰区分(使用 Markdown 三重反引号表示代码,使用引号表示输入/输出示例等)。一致性有助于模型正确解析你的意图。此外,如果你有偏好的风格(比如 ES6 与 ES5 语法),请始终提及,否则模型可能在一个提示中建议一种方式,之后又建议另一种方式。Anti-Pattern: Vague references like “above code”. When using chat, if you say “the above function” or “the previous output”, be sure the reference is clear. If the conversation is long and you say “refactor the above code”, the AI might lose track or pick the wrong code snippet to refactor. It’s safer to either quote the code again or specifically name the function you want refactored. Models have a limited attention window, and although many LLMs can refer to prior parts of the conversation, giving it explicit context again can help avoid confusion. This is especially true if some time (or several messages) passed since the code was shown.

反模式:模糊的引用,如“上面的代码”。在使用聊天时,如果你说“上面的函数”或“之前的输出”,务必确保引用清晰。如果对话很长,你说“重构上面的代码”,AI 可能会丢失上下文或选择错误的代码片段进行重构。更安全的做法是重新引用代码,或者明确指出你想重构的函数名称。模型的注意力窗口有限,虽然许多 LLMs 可以参考对话的前部分内容,但再次提供明确的上下文有助于避免混淆。尤其是在代码展示后经过一段时间(或多条消息)时更是如此。

Finally, here’s a tactical approach to rewriting prompts when things go wrong:

最后,当出现问题时,这里有一种战术性的方法来重写提示:

Identify what was missing or incorrect in the AI’s response. Did it solve a different problem? Did it produce an error or a solution that doesn’t fit? For example, maybe you asked for a solution in TypeScript but it gave plain JavaScript. Or it wrote a recursive solution when you explicitly wanted iterative. Pinpoint the discrepancy.

找出 AI 回答中缺失或错误的部分。它是否解决了不同的问题?是否产生了错误或不合适的解决方案?例如,你可能要求用 TypeScript 写解决方案,但它却给出了纯 JavaScript 代码。或者你明确要求迭代解法,但它写了递归解法。找出这些不一致之处。Add or emphasize that requirement in a new prompt. You might say, “The solution should be in TypeScript, not JavaScript. Please include type annotations.” Or, “I mentioned I wanted an iterative solution – please avoid recursion and use a loop instead.” Sometimes it helps to literally use phrases like “Note:” or “Important:” in your prompt to highlight key constraints (the model doesn’t have emotions, but it does weigh certain phrasing as indicating importance). For instance: “Important: Do not use any external libraries for this.” or “Note: The code must run in the browser, so no Node-specific APIs.”.

在新的提示中添加或强调该要求。你可以说:“解决方案应使用 TypeScript,而非 JavaScript。请包含类型注解。”或者,“我提到过我想要一个迭代的解决方案——请避免递归,改用循环。”有时在提示中直接使用“注意:”或“重要:”这样的短语,有助于突出关键限制(模型没有情感,但会将某些措辞视为重要提示)。例如:“重要:请勿使用任何外部库。”或“注意:代码必须在浏览器中运行,因此不能使用 Node 特定的 API。”。Break down the request further if needed. If the AI repeatedly fails on a complex request, try asking for a smaller piece first. Or ask a question that might enlighten the situation: “Do you understand what I mean by X?” The model might then paraphrase what it thinks you mean, and you can correct it if it’s wrong. This is meta-prompting – discussing the prompt itself – and can sometimes resolve misunderstandings.

如有需要,可进一步细化请求。如果 AI 在处理复杂请求时反复失败,尝试先请求较小的部分。或者提出一个可能澄清情况的问题:“你明白我说的 X 是什么意思吗?”模型可能会复述它认为你的意思,你可以在它错误时进行纠正。这就是元提示——讨论提示本身——有时可以解决误解。Consider starting fresh if the thread is stuck. Sometimes after multiple tries, the conversation may reach a confused state. It can help to start a new session (or clear the chat history for a moment) and prompt from scratch with a more refined ask that you’ve formulated based on previous failures. The model doesn’t mind repetition, and a fresh context can eliminate any accumulated confusion from prior messages.

如果对话陷入僵局,考虑重新开始。有时经过多次尝试,谈话可能会变得混乱。此时,开启一个新会话(或暂时清除聊天记录)并从头开始,以基于之前的失败制定更精确的提问,会有所帮助。模型不介意重复,新的环境可以消除之前信息积累的任何混淆。

By being aware of these anti-patterns and their solutions, you’ll become much faster at adjusting your prompts on the fly. Prompt engineering for developers is very much an iterative, feedback-driven process (as any programming task is!). The good news is, you now have a lot of patterns and examples in your toolkit to draw from.

了解这些反模式及其解决方案后,您将能够更快地即时调整提示。对于开发者来说,提示工程本质上是一个迭代的、基于反馈的过程(就像任何编程任务一样!)。好消息是,您现在拥有了许多模式和示例,可以作为工具箱中的资源。

Conclusion 结论

Prompt engineering is a bit of an art and a bit of a science – and as we’ve seen, it’s quickly becoming a must-have skill for developers working with AI code assistants. By crafting clear, context-rich prompts, you essentially teach the AI what you need, just as you would onboard a human team member or explain a problem to a peer. Throughout this article, we explored how to systematically approach prompts for debugging, refactoring, and feature implementation:

提示工程既是一门艺术,也是一门科学——正如我们所见,它正迅速成为与 AI 代码助手合作的开发者必备的技能。通过设计清晰且富有上下文的提示,您实际上是在教 AI 您的需求,就像您引导新团队成员或向同事解释问题一样。本文中,我们探讨了如何系统地处理用于调试、重构和功能实现的提示:

We learned to feed the AI the same information you’d give a colleague when asking for help: what the code is supposed to do, how it’s misbehaving, relevant code snippets, and so on – thereby getting much more targeted help .

我们学会了向 AI 提供与向同事求助时相同的信息:代码应完成的功能、代码的异常表现、相关代码片段等——从而获得更有针对性的帮助。We saw the power of iterating with the AI, whether it’s stepping through a function’s logic line by line, or refining a solution through multiple prompts (like turning a recursive solution into an iterative one, then improving variable names) . Patience and iteration turn the AI into a true pair programmer rather than a one-shot code generator.

我们看到了与 AI 反复迭代的力量,无论是逐行分析函数的逻辑,还是通过多次提示来完善解决方案(比如将递归解决方案改为迭代方案,然后改进变量名)。耐心和反复迭代使 AI 成为真正的搭档程序员,而不是一次性代码生成器。We utilized role-playing and personas to up-level the responses – treating the AI as a code reviewer, a mentor, or an expert in a certain stack . This often produces more rigorous and explanation-rich outputs, which not only solve the problem but educate us in the process.

我们利用角色扮演和人物设定来提升回答质量——将 AI 视为代码审查员、导师或某个技术栈的专家。这通常会产生更严谨且富有解释性的输出,不仅解决问题,还在过程中教育我们。For refactoring and optimization, we emphasized defining what “good” looks like (be it faster, cleaner, more idiomatic, etc.) , and the AI showed that it can apply known best practices when guided (like parallelizing calls, removing duplication, handling errors properly). It’s like having access to the collective wisdom of countless code reviewers – but you have to ask the right questions to tap into it.

在重构和优化时,我们强调定义“好”的标准(无论是更快、更简洁、更符合习惯用法等),而且人工智能展示了在指导下能够应用已知的最佳实践(如并行调用、去除重复、正确处理错误)。这就像拥有无数代码审查者的集体智慧——但你必须提出正确的问题才能利用它。We also demonstrated building new features step by step with AI assistance, showing that even complex tasks can be decomposed and tackled one prompt at a time. The AI can scaffold boilerplate, suggest implementations, and even highlight edge cases if prompted – acting as a knowledgeable co-developer who’s always available.